TryStack is a free way to try OpenStack. It's an OpenStack sandbox, which means it is a testing environment only: you cannot use it to host production servers. You can get a free account by joining the TryStack Facebook group. Because it is a testing facility, it has several limitations. Server instances that you launch in TryStack are available for 24 hours only, and you cannot upload your own server images; you can only choose from the images already provided.

After joining their Facebook group, you can start using TryStack with your Facebook login.

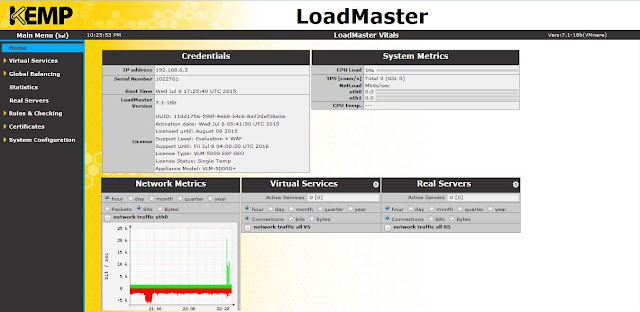

Once you log in successfully, you will see the OpenStack dashboard as shown below.

Your account is limited to a maximum of 3 instances, 6 VCPUs, 12 GB of RAM and 4 floating IPs, among other quotas.
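Since the limits are small, it can help to check a planned set of VMs against them before launching anything. The following is a minimal sketch, not part of TryStack or OpenStack: the `PlannedInstance` class, the instance names and the VCPU/RAM sizes are all hypothetical, and only the four limits come from the text above.

```python
from dataclasses import dataclass

# Limits quoted in this article; other quotas are not modelled here.
QUOTA = {"instances": 3, "vcpus": 6, "ram_gb": 12, "floating_ips": 4}


@dataclass
class PlannedInstance:
    """A VM you intend to launch (sizes are illustrative, not real flavors)."""
    name: str
    vcpus: int
    ram_gb: int
    needs_floating_ip: bool = True


def quota_usage(plan):
    """Add up what the planned instances would consume."""
    return {
        "instances": len(plan),
        "vcpus": sum(p.vcpus for p in plan),
        "ram_gb": sum(p.ram_gb for p in plan),
        "floating_ips": sum(1 for p in plan if p.needs_floating_ip),
    }


def exceeded(plan, quota=QUOTA):
    """Return the names of the limits that the plan would exceed."""
    usage = quota_usage(plan)
    return [key for key, limit in quota.items() if usage[key] > limit]


plan = [
    PlannedInstance("web", vcpus=2, ram_gb=4),
    PlannedInstance("db", vcpus=4, ram_gb=8, needs_floating_ip=False),
]
print(exceeded(plan))  # → []
```

An empty list means the plan fits; adding one more instance to this plan would push it over the VCPU and RAM limits.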

Before creating your first cloud VM, you need to do some initial configuration: creating a network, adding a router, defining a security group and so on. These steps are explained below.

Creating a network:

Click the create network tab, enter a name, and click next.

Enter the network address with its subnet mask, and the gateway IP. Click next.

Enter the following details as shown below and click create.

A new network (192.168.10.0/24) named private is now created.
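If you want to sanity-check the addressing before typing it into the dashboard, Python's standard `ipaddress` module can do it. This is only a sketch: the network 192.168.10.0/24 is the one used in this article, while the gateway address 192.168.10.1 is an assumption based on the router interface that appears later in this walkthrough.

```python
import ipaddress

# Network used in this article.
network = ipaddress.ip_network("192.168.10.0/24")
# Assumed gateway address (matches the router interface used later on).
gateway = ipaddress.ip_address("192.168.10.1")

usable_hosts = list(network.hosts())

print(network.netmask)    # → 255.255.255.0
print(gateway in network)  # → True
print(len(usable_hosts))   # → 254
print(usable_hosts[0], usable_hosts[-1])  # → 192.168.10.1 192.168.10.254
```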

Next, you need a router to enable communication between your internal and external networks. Click the create router tab, enter a router name, and click create router.

The router is now created, as shown below. Hover over the router to see the option to add an interface.

Click add interface, select the private network that you created earlier from the drop-down menu, enter the details as shown below, and click the add interface button.

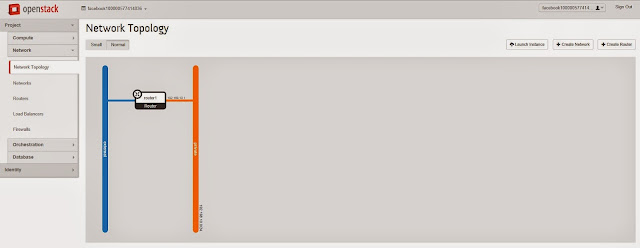

Now the router is connected to your private network through the interface 192.168.10.1.

The next step is to connect the router to the external network. Select the routers tab from the left-hand menu.

Click the set gateway button, select the external network, and click set gateway.

Now check your network topology. You can see that the internal and external networks are connected through the router.

The next step is to create a security group and define its rules. Select access & security from the left-hand menu, and then click create security group.

Enter a name and description and click create.

Now click the manage rules tab of the security group that you have just created (securitygroup1). If any rules are already present, select them all and delete them. Click add rule. A window opens, as shown below, in which you can add your custom rules.

The above rule allows only ingress HTTP traffic. Similarly, we have to allow ingress as well as egress traffic for HTTP, HTTPS, SSH and ICMP. Once all the rules are added, the list will look like the screenshot below.
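To keep track of which rules still need to be added, the full set can be written out as data. The sketch below is only a checklist generator, not a call to OpenStack. The field names follow the attributes of a Neutron security group rule, the ports are the standard ones for each service, and the open `0.0.0.0/0` range is an assumption, since the article does not say which remote range to use.

```python
# Standard protocol/port for each service named in the article.
SERVICES = {
    "HTTP": ("tcp", 80),
    "HTTPS": ("tcp", 443),
    "SSH": ("tcp", 22),
    "ICMP": ("icmp", None),  # ICMP has no port
}


def build_rules(services=SERVICES, cidr="0.0.0.0/0"):
    """Build one ingress and one egress rule per service.

    `cidr` is an assumed remote range (any address); narrow it if you can.
    """
    rules = []
    for direction in ("ingress", "egress"):
        for protocol, port in services.values():
            rules.append({
                "direction": direction,
                "ethertype": "IPv4",
                "protocol": protocol,
                "port_range_min": port,
                "port_range_max": port,
                "remote_ip_prefix": cidr,
            })
    return rules


rules = build_rules()
print(len(rules))  # → 8
for rule in rules:
    print(rule["direction"], rule["protocol"], rule["port_range_min"])
```

Four services in two directions give eight rules, which is the number of entries you should end up with in the manage rules view if you follow the same scheme.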

Next, you have to create a key pair.

Click import key pair. Here I am using the PuTTY Key Generator to generate the keys. Click the generate button and a key pair will be generated. Save the private key locally on your machine; it will be a .ppk file. As shown in the screenshot below, copy the public key from the PuTTY Key Generator and paste it into the public key text field. Give the key pair a name too.

Click import key pair, and the key pair will be imported as shown below.

The next step is to allocate floating IPs.

Click allocate IP to project.

Click allocate IP, and a floating IP will be allocated.

Now you are all set to create your first instance. Select the instances tab and then click launch instance.

Do not click launch yet. Click the next tab (access & security).

Select the key pair and security group that we created earlier. Click launch. You have just created your first instance.

From the above screenshot, you can see that the VM has an internal IP address. In order to access this VM from the public network, you have to associate it with a floating IP, as shown in the screenshot below.

Click associate floating IP.

Select the IP address from the drop-down menu and click associate. Now you can see that the floating IP is mapped to the private IP of the VM.

The status of your instance and the network topology are shown below.

You can now ping your cloud instance.
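A successful ping only confirms that ICMP gets through. To confirm that the SSH rule and the floating IP mapping are also working, you can test whether TCP port 22 accepts connections. This is a minimal sketch using Python's standard `socket` module; the function name `port_open` is hypothetical, and you need to pass in your own floating IP.

```python
import socket


def port_open(host, port=22, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

Calling `port_open("<your floating IP>")` should return `True` once the instance has finished booting. If it returns `False` while ping works, the SSH rule in the security group is the first thing to check.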

You can SSH into your VM using PuTTY. Enter the IP address of the VM (the floating IP that you associated with it).

Under the Connection category, select Data and enter fedora as the auto-login username. This is the default user name for the Fedora 21 cloud image.

Browse to the private key file that you saved locally on your machine, select it, and click open.

You are now successfully connected to your OpenStack cloud instance through SSH.

A final overview from your OpenStack dashboard is also shown below.

Thank you and all the best in creating your first OpenStack cloud instance. Cheers!