When troubleshooting application connectivity and name resolution issues in Kubernetes, having the right tools at hand makes a big difference. A common obstacle is that utilities like ping, nslookup, dig, and traceroute are often not available where you need them, because many application images ship without them. To simplify this, we've built a container image that bundles these utilities, so you can quickly identify and resolve connectivity issues from inside the cluster.

The Container Image: A Swiss Army Knife for Troubleshooting

This container image, designed specifically for Kubernetes troubleshooting, comes pre-installed with the following essential utilities:

- ping: A classic network diagnostic tool for testing connectivity.

- dig: A DNS lookup tool for querying DNS servers for records such as A, AAAA, CNAME, and SRV.

- nslookup: A tool for querying DNS servers, for example to map a hostname to its IP addresses or an IP address back to a hostname.

- traceroute: A network diagnostic tool for tracing the path of packets across a network.

- curl: A command-line tool for transferring data to and from a server using HTTP, HTTPS, SCP, SFTP, TFTP, and other protocols.

- wget: A command-line tool for downloading files from the web.

- nc: A command-line tool for reading and writing data to a network socket.

- netstat: A command-line tool for displaying network connections, routing tables, and interface statistics.

- ifconfig: A command-line tool for displaying and configuring network interfaces.

- route: A command-line tool for displaying and modifying the IP routing table.

- host: A command-line tool for performing DNS lookups and resolving hostnames.

- arp: A command-line tool for displaying and modifying the ARP cache.

- iostat: A command-line tool for displaying disk I/O statistics.

- top: A command-line tool for displaying system resource usage.

- free: A command-line tool for displaying free memory and swap space.

- vmstat: A command-line tool for displaying virtual memory statistics.

- pmap: A command-line tool for displaying process memory maps.

- mpstat: A command-line tool for displaying multiprocessor statistics.

- python3: The Python 3 interpreter.

- pip: A package installer for Python.
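To confirm that the utilities listed above are actually on the `PATH` inside a running pod, a short check with the shell builtin `command -v` is enough. This is a minimal sketch, assuming bash is available in the image (the exec example later in this post uses bash); `check_tools` is a hypothetical helper name, not part of the image.

```shell
#!/usr/bin/env bash
# Report which of the troubleshooting utilities listed above are on PATH.
# Hypothetical helper; assumes bash is available where it runs.

check_tools() {
  local missing=0
  local tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      printf 'ok       %s\n' "$tool"
    else
      printf 'MISSING  %s\n' "$tool"
      missing=$((missing + 1))
    fi
  done
  # Return a non-zero status if any tool was not found.
  [ "$missing" -eq 0 ]
}

check_tools ping dig nslookup traceroute curl wget nc netstat ifconfig \
  route host arp iostat top free vmstat pmap mpstat python3 pip \
  || echo "One or more tools were not found on PATH."
```

Once the debug pod from the next section is running, you could save this as `check-tools.sh` (a hypothetical file name) and feed it to the pod's shell over stdin with `kubectl exec -i debug -n default -- bash -s < check-tools.sh`.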

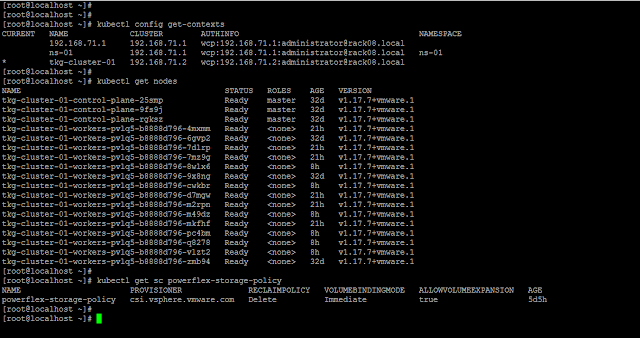

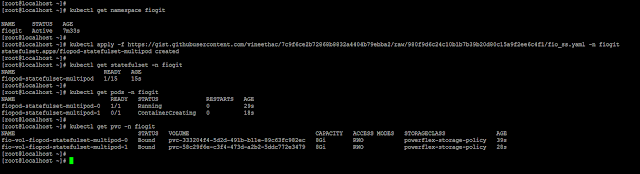

Run as a pod on Kubernetes

    ❯ kubectl run debug --image=vineethac/debug -n default -- sleep infinity

The `sleep infinity` argument keeps the container running so that you can exec into it.

Exec into the debug pod

    ❯ kubectl exec -it debug -n default -- bash
    root@debug:/# ping 8.8.8.8
    PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.
    64 bytes from 8.8.8.8: icmp_seq=1 ttl=46 time=49.3 ms
    64 bytes from 8.8.8.8: icmp_seq=2 ttl=45 time=57.4 ms
    64 bytes from 8.8.8.8: icmp_seq=3 ttl=46 time=49.4 ms
    ^C
    --- 8.8.8.8 ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2003ms
    rtt min/avg/max/mdev = 49.334/52.030/57.404/3.799 ms
    root@debug:/#

    root@debug:/# nslookup google.com
    Server:     10.96.0.10
    Address:    10.96.0.10#53

    Non-authoritative answer:
    Name:    google.com
    Address: 142.250.72.206
    Name:    google.com
    Address: 2607:f8b0:4005:80c::200e

    root@debug:/# exit
    exit
    ❯
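The `Server: 10.96.0.10` line shows which resolver nslookup queried. When no server is given, nslookup uses the pod's `/etc/resolv.conf`, and for pods with the default `ClusterFirst` DNS policy the kubelet points that file at the cluster DNS Service (10.96.0.10 is a common default on kubeadm clusters; yours may differ). A minimal sketch for printing the nameserver and search entries, so you can compare them with what nslookup reports; `show_resolvers` is a hypothetical helper name, and it assumes `awk` is available in the image.

```shell
#!/usr/bin/env bash
# Print the nameserver and search entries from a resolv.conf file.
# Hypothetical helper; defaults to the pod's own /etc/resolv.conf.

show_resolvers() {
  local conf="${1:-/etc/resolv.conf}"
  awk '
    # Resolver IPs that DNS queries are sent to.
    $1 == "nameserver" { print "nameserver: " $2 }
    # Search domains appended to short names such as "kubernetes.default".
    $1 == "search" { $1 = ""; sub(/^ +/, ""); print "search: " $0 }
  ' "$conf"
}

# Only read the real file when it exists and is readable.
if [ -r /etc/resolv.conf ]; then
  show_resolvers /etc/resolv.conf
fi
```

If the nameserver printed here does not match the cluster DNS Service IP, or the search list lacks the expected `svc` domains, short Service names will not resolve even though external names like google.com might.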

Reference

https://github.com/vineethac/Docker/tree/main/debug-image

By having these essential utilities at your fingertips, you'll be better equipped to quickly identify and resolve connectivity issues in your Kubernetes cluster, saving you time and reducing the complexity of troubleshooting.

Hope it was useful. Cheers!