This article walks you through the steps to deploy an application on a Tanzu Kubernetes Cluster (TKC) and access it. Along the way I will try to explain how it all works under the hood.

Here is the TKC I am using:

% KUBECONFIG=gc.kubeconfig kg nodes

NAME STATUS ROLES AGE VERSION

gc-control-plane-pwngg Ready control-plane,master 49d v1.20.9+vmware.1

gc-workers-wrknn-f675446b6-cz766 Ready <none> 49d v1.20.9+vmware.1

gc-workers-wrknn-f675446b6-f6zqs Ready <none> 49d v1.20.9+vmware.1

gc-workers-wrknn-f675446b6-rsf6n Ready <none> 49d v1.20.9+vmware.1

% KUBECONFIG=gc.kubeconfig kg nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

gc-control-plane-pwngg Ready control-plane,master 49d v1.20.9+vmware.1 172.29.21.194 <none> VMware Photon OS/Linux 4.19.191-4.ph3-esx containerd://1.4.6

gc-workers-wrknn-f675446b6-cz766 Ready <none> 49d v1.20.9+vmware.1 172.29.21.195 <none> VMware Photon OS/Linux 4.19.191-4.ph3-esx containerd://1.4.6

gc-workers-wrknn-f675446b6-f6zqs Ready <none> 49d v1.20.9+vmware.1 172.29.21.196 <none> VMware Photon OS/Linux 4.19.191-4.ph3-esx containerd://1.4.6

gc-workers-wrknn-f675446b6-rsf6n Ready <none> 49d v1.20.9+vmware.1 172.29.21.197 <none> VMware Photon OS/Linux 4.19.191-4.ph3-esx containerd://1.4.6
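The commands in this post use `k` and `kg`, which appear to be local shorthand for `kubectl` and `kubectl get`. Their definitions are not shown, so the following is only an assumed equivalent, written as shell functions so that it also works in scripts.

```shell
# Assumed equivalents of the shorthand used in this post. The original
# definitions are not shown, so treat these as a guess.
k()  { kubectl "$@"; }
kg() { kubectl get "$@"; }

# Usage, matching the commands above:
#   KUBECONFIG=gc.kubeconfig kg nodes -o wide
```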

01 Create a namespace

% KUBECONFIG=gc.kubeconfig k create ns webserver

namespace/webserver created

% KUBECONFIG=gc.kubeconfig kg ns

NAME STATUS AGE

default Active 48d

kube-node-lease Active 48d

kube-public Active 48d

kube-system Active 48d

vmware-system-auth Active 48d

vmware-system-cloud-provider Active 48d

vmware-system-csi Active 48d

webserver Active 10s

02 Deploy nginx application

The following nginx-deployment.yaml spec deploys the nginx application:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  selector:
    matchLabels:
      run: my-nginx
  replicas: 2
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - name: my-nginx
        image: nginx
        ports:
        - containerPort: 80

Apply the YAML file as shown below:

% KUBECONFIG=gc.kubeconfig k apply -f nginx-deployment.yaml -n webserver

deployment.apps/my-nginx created

% KUBECONFIG=gc.kubeconfig kg deploy -n webserver

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 0/2 0 0 3m3s

% KUBECONFIG=gc.kubeconfig kg events -n webserver

LAST SEEN TYPE REASON OBJECT MESSAGE

26s Warning FailedCreate replicaset/my-nginx-74d7c6cb98 Error creating: pods "my-nginx-74d7c6cb98-" is forbidden: PodSecurityPolicy: unable to admit pod: []

3m10s Normal ScalingReplicaSet deployment/my-nginx Scaled up replica set my-nginx-74d7c6cb98 to 2

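The events show that the pods were not created because no PodSecurityPolicy admitted them: the pod's service account is not yet authorized to use any policy. The following psp.yaml spec creates a ClusterRole that allows use of the vmware-system-privileged policy, and a ClusterRoleBinding that grants that role to all service accounts in the cluster.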

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: psp:privileged
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames:
  - vmware-system-privileged
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: all:psp:privileged
roleRef:
  kind: ClusterRole
  name: psp:privileged
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: Group
  name: system:serviceaccounts
  apiGroup: rbac.authorization.k8s.io

Apply the yaml file as shown below:

% KUBECONFIG=gc.kubeconfig k apply -f psp.yaml

clusterrole.rbac.authorization.k8s.io/psp:privileged created

clusterrolebinding.rbac.authorization.k8s.io/all:psp:privileged created
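The ClusterRoleBinding above grants the privileged policy to every service account in the cluster. If that is broader than you need, a narrower option is a RoleBinding that grants the same ClusterRole only to service accounts in the webserver namespace. The sketch below writes such a manifest; the file name `psp-rolebinding.yaml` and the binding name are hypothetical, and this variant was not applied to the cluster shown in this post.

```shell
# Write a namespaced RoleBinding that reuses the psp:privileged ClusterRole.
# File name and binding name are illustrative, not taken from the post.
cat > psp-rolebinding.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: webserver:psp:privileged
  namespace: webserver
roleRef:
  kind: ClusterRole
  name: psp:privileged
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: Group
  # All service accounts in the webserver namespace only.
  name: system:serviceaccounts:webserver
  apiGroup: rbac.authorization.k8s.io
EOF

# Apply it, then ask the API server whether the default service account
# may use the policy:
#   KUBECONFIG=gc.kubeconfig kubectl apply -f psp-rolebinding.yaml
#   KUBECONFIG=gc.kubeconfig kubectl auth can-i use \
#     podsecuritypolicy/vmware-system-privileged \
#     --as=system:serviceaccount:webserver:default -n webserver
```

A RoleBinding only covers pods created in its own namespace, so each additional namespace would need its own binding.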

Within a few minutes the deployment succeeds, and two nginx pods are running in the webserver namespace.

% KUBECONFIG=gc.kubeconfig kg deploy -n webserver

NAME READY UP-TO-DATE AVAILABLE AGE

my-nginx 2/2 2 2 80m

% KUBECONFIG=gc.kubeconfig kg pods -n webserver -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx-74d7c6cb98-lzghr 1/1 Running 0 67m 192.168.213.132 gc-workers-wrknn-f675446b6-rsf6n <none> <none>

my-nginx-74d7c6cb98-s59dt 1/1 Running 0 67m 192.168.67.196 gc-workers-wrknn-f675446b6-f6zqs <none> <none>
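Rather than re-running `get deploy` until the READY column reaches 2/2, you can let kubectl block until the rollout completes. A minimal sketch follows; the function name and the 5m default timeout are assumptions for illustration.

```shell
# Block until the Deployment finishes rolling out, or fail after a timeout.
# Arguments: namespace, deployment name, optional timeout (default 5m).
wait_for_rollout() {
  ns=$1
  name=$2
  timeout=${3:-5m}
  kubectl rollout status "deployment/$name" -n "$ns" --timeout="$timeout"
}

# Usage:
#   KUBECONFIG=gc.kubeconfig wait_for_rollout webserver my-nginx
```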

03 Access the application

You can access the application in several ways, depending on the use case.

---Port-forward---

% KUBECONFIG=gc.kubeconfig kubectl port-forward deployment/my-nginx -n webserver 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

Handling connection for 8080

The deployment is now port-forwarded. If you open another terminal and run curl localhost:8080, you will see the nginx welcome page.

% curl localhost:8080

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

You can also open http://localhost:8080/ in a web browser and get the same nginx page. Port-forwarding is fine for local development and testing, but you would not want to rely on it in a production setup. For that, you need to create a service that exposes the application.

Services

This post covers three service types in Kubernetes (there is also ExternalName, which is not used here).

- NodePort: Opens the same port on every node. Traffic arriving on that port is forwarded to the target port of the pods where the application is running.

- ClusterIP: The default type. It gives the service an internal IP, which is useful if you want to access the application from within the cluster.

- LoadBalancer: This is used to provide access to external users. In my case, NSX-T will be providing this access.

---Service NodePort---

The following is the YAML spec for a service of type NodePort:

% cat nginx-service-np.yaml

apiVersion: v1
kind: Service
metadata:
  name: my-nginx
  labels:
    run: my-nginx
spec:
  type: NodePort
  ports:
  - targetPort: 80
    port: 80
    protocol: TCP
  selector:
    run: my-nginx

Apply the above yaml file.

% KUBECONFIG=gc.kubeconfig k apply -f nginx-service-np.yaml -n webserver

service/my-nginx created

% KUBECONFIG=gc.kubeconfig kg svc -n webserver

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-nginx NodePort 10.111.182.155 <none> 80:30741/TCP 4s

% KUBECONFIG=gc.kubeconfig kg ep -n webserver

NAME ENDPOINTS AGE

my-nginx 192.168.213.132:80,192.168.67.196:80 32m

As you can see, a service (my-nginx) of type NodePort has been created, and the application should now be accessible on port 30741 of any worker node. To verify this, we first need connectivity to the worker node IPs, which requires a jumpbox pod deployed in the supervisor namespace. From that jumpbox pod we can SSH to the TKC nodes or, as here, curl the NodePort directly. You can follow my previous post to see how to create a jumpbox pod, and the VMware documentation describes how to SSH to TKC nodes.

% KUBECONFIG=sv.kubeconfig k exec -it jumpbox -- sh

sh-4.4#

sh-4.4# curl 172.29.21.197:30741

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

sh-4.4#
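The curl above targets 172.29.21.197, a node that runs one of the nginx pods. With the default external traffic policy, the other workers should answer on the same port too, including 172.29.21.195, which hosts no nginx pod in the listing earlier. Here is a small sketch for checking every worker from the jumpbox; the helper name `nodeport_urls` is hypothetical.

```shell
# Print one URL per node IP for a given NodePort.
# Arguments: node port, then one or more node IPs.
nodeport_urls() {
  port=$1
  shift
  for ip in "$@"; do
    printf 'http://%s:%s\n' "$ip" "$port"
  done
}

# Usage from the jumpbox, with the worker IPs and port from this post:
#   for url in $(nodeport_urls 30741 172.29.21.195 172.29.21.196 172.29.21.197); do
#     curl -s -o /dev/null -w "$url -> %{http_code}\n" "$url"
#   done
```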

---Service ClusterIP---

A service of type ClusterIP is accessible only from within the TKC, so I need a jumpbox or test pod inside the TKC to connect from. First, let me edit the my-nginx service and change its type from NodePort to ClusterIP.

% KUBECONFIG=gc.kubeconfig k edit svc my-nginx -n webserver

service/my-nginx edited

% KUBECONFIG=gc.kubeconfig kg svc -n webserver

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-nginx ClusterIP 10.111.182.155 <none> 80/TCP 39m
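If you prefer not to open an editor, the same type change can be made with `kubectl patch`. The sketch below is an assumed alternative to the `k edit` step, not what was run for this post, and the helper names are hypothetical. Depending on the Kubernetes version, the API server may also require the allocated nodePort to be cleared when moving from NodePort to ClusterIP; that case is not handled here.

```shell
# Build the patch body that sets only spec.type.
svc_type_patch() {
  printf '{"spec":{"type":"%s"}}' "$1"
}

# Switch a Service to the given type without opening an editor.
# Arguments: namespace, service name, type (ClusterIP, NodePort, LoadBalancer).
set_svc_type() {
  kubectl patch svc "$2" -n "$1" -p "$(svc_type_patch "$3")"
}

# Usage:
#   KUBECONFIG=gc.kubeconfig set_svc_type webserver my-nginx ClusterIP
```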

I have already deployed a pod inside the TKC: dnsutils, running in the default namespace. We will connect to this pod and curl the cluster IP of the my-nginx service from there.

% KUBECONFIG=gc.kubeconfig kg pods

NAME READY STATUS RESTARTS AGE

dnsutils 1/1 Running 1 105m

% KUBECONFIG=gc.kubeconfig k exec -it dnsutils -- sh

#

# curl 10.111.182.155:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

#

Note: This service of type ClusterIP can be accessed only within the TKC, and not externally!
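Inside the cluster you usually do not need the cluster IP at all, because cluster DNS gives every service a name of the form service.namespace.svc.cluster-domain. The sketch below composes that name; it assumes the default cluster domain `cluster.local`, which was not verified for this TKC, and the helper name is hypothetical.

```shell
# Compose the in-cluster DNS name of a Service.
# Arguments: service name, namespace, optional cluster domain
# (default cluster.local, an assumption for this cluster).
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

# Usage from inside a pod such as dnsutils:
#   curl "http://$(svc_fqdn my-nginx webserver)"
```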

---Service LoadBalancer---

This is the way to expose your service to external users. In this case NSX-T provides the external IP, and traffic to it is forwarded to the nginx pods through the my-nginx service.

I have edited the service my-nginx from type ClusterIP to LoadBalancer.

% KUBECONFIG=gc.kubeconfig k edit svc my-nginx -n webserver

service/my-nginx edited

% KUBECONFIG=gc.kubeconfig kg svc -n webserver

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-nginx LoadBalancer 10.111.182.155 <pending> 80:32398/TCP 56m

% KUBECONFIG=gc.kubeconfig kg svc -n webserver

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-nginx LoadBalancer 10.111.182.155 10.186.148.170 80:32398/TCP 56m

You can see that the service now has an external IP. The endpoints of the service, shown below, are simply the nginx pod IPs.

% KUBECONFIG=gc.kubeconfig kg ep -n webserver

NAME ENDPOINTS AGE

my-nginx 192.168.213.132:80,192.168.67.196:80 58m
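The EXTERNAL-IP column earlier went from <pending> to an address between two runs of the same command. In a script, that wait can be sketched as a polling loop over the service status. The function name, retry count and 10-second interval are assumptions for illustration.

```shell
# Poll a LoadBalancer Service until it reports an external IP.
# Arguments: namespace, service name, optional number of tries (default 30).
# Prints the IP and returns 0 on success; returns 1 if none appears in time.
wait_for_lb_ip() {
  ns=$1
  name=$2
  tries=${3:-30}
  while [ "$tries" -gt 0 ]; do
    ip=$(kubectl get svc "$name" -n "$ns" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    tries=$((tries - 1))
    sleep 10
  done
  return 1
}

# Usage:
#   ip=$(KUBECONFIG=gc.kubeconfig wait_for_lb_ip webserver my-nginx) && curl "$ip"
```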

% curl 10.186.148.170

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

I could also use the external IP 10.186.148.170 in a web browser to access the nginx webpage.

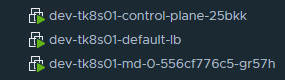

Now let's have a look at what is in the supervisor namespace. This TKC was created under the supervisor namespace "vineetha-test04-deploy".

% kubectl get svc -n vineetha-test04-deploy

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

gc-ba320a1e3e04259514411 LoadBalancer 172.28.5.217 10.186.148.170 80:31143/TCP 40h

gc-control-plane-service LoadBalancer 172.28.9.37 10.186.149.120 6443:31639/TCP 51d

% kubectl get ep -n vineetha-test04-deploy

NAME ENDPOINTS AGE

gc-ba320a1e3e04259514411 172.29.21.195:32398,172.29.21.196:32398,172.29.21.197:32398 40h

gc-control-plane-service 172.29.21.194:6443 51d

So, for a service of type LoadBalancer created inside the TKC, a matching service of type LoadBalancer (gc-ba320a1e3e04259514411) is created automatically in the supervisor namespace. Its endpoints are the IP addresses of the TKC worker nodes on port 32398, which is the NodePort of the my-nginx service inside the TKC.
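One way to confirm how the two layers are linked is to compare the port in the supervisor endpoints with the NodePort of the service inside the TKC. The sketch below extracts the distinct ports from an ENDPOINTS string such as the one printed above; the helper name `endpoint_ports` is hypothetical.

```shell
# Print the distinct ports found in a comma-separated ENDPOINTS string
# such as "172.29.21.195:32398,172.29.21.196:32398".
endpoint_ports() {
  printf '%s\n' "$1" | tr ',' '\n' | sed 's/.*://' | sort -u
}

# Usage with the supervisor endpoints from this post:
#   endpoint_ports '172.29.21.195:32398,172.29.21.196:32398,172.29.21.197:32398'
# Compare the result with the NodePort of the service inside the TKC:
#   KUBECONFIG=gc.kubeconfig kubectl get svc my-nginx -n webserver \
#     -o jsonpath='{.spec.ports[0].nodePort}'
```

With the values shown in this post, both sides give 32398 (the TKC service lists 80:32398/TCP).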

On the NSX-T side you can see the LB for my supervisor namespace, virtual servers in it, and server pool members in the virtual server.

I hope it was useful. Cheers!
