In the previous posts we discussed the following:

Now that we have enabled workload management, the next step is to create namespaces on the supervisor cluster and set resource quotas as required. The vSphere administrator can then give developers access to these namespaces, where they can deploy Tanzu Kubernetes clusters, VMs, or vSphere pods.

- Select the cluster and provide a name for the namespace.

- The namespace is now created. Before handing it over to the developer, you can set permissions, assign storage policies, and set resource limits.

Let's have a look at the NSX-T components that are instantiated when we create a new namespace.

- A new segment is created for the namespace. This segment is connected to the T1 Gateway of the supervisor cluster.

- A SNAT rule is also in place on the supervisor cluster T1 Gateway. It lets the Kubernetes objects in the namespace reach the external network/internet, translating their source addresses using the egress range 192.168.72.0/24 that we provided during the workload management configuration.
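To make the SNAT idea concrete, here is a toy Python model of source NAT. It is not how NSX-T implements SNAT; it only illustrates that a packet leaving the namespace segment gets its source address rewritten to an address from the egress range. Only the 192.168.72.0/24 egress range comes from this setup; the segment subnet 10.244.0.0/28 and the egress IP 192.168.72.2 are hypothetical values chosen for illustration.

```python
import ipaddress
from dataclasses import dataclass, replace

# Egress range provided during the workload management configuration.
EGRESS_RANGE = ipaddress.ip_network("192.168.72.0/24")

# HYPOTHETICAL values for illustration only: the namespace segment subnet and
# the egress IP assigned to this namespace are not taken from the lab.
NAMESPACE_SEGMENT = ipaddress.ip_network("10.244.0.0/28")
SNAT_IP = ipaddress.ip_address("192.168.72.2")
assert SNAT_IP in EGRESS_RANGE  # the translated address must come from the egress range


@dataclass(frozen=True)
class Packet:
    src: ipaddress.IPv4Address
    dst: ipaddress.IPv4Address


def snat(packet: Packet) -> Packet:
    """Toy SNAT: rewrite the source of packets leaving the namespace segment."""
    if packet.src in NAMESPACE_SEGMENT:
        return replace(packet, src=SNAT_IP)
    return packet  # traffic from other sources is left untouched
```

For example, translating a packet from 10.244.0.5 to 8.8.8.8 yields a packet with source 192.168.72.2 and the same destination. A real SNAT implementation also keeps connection state so that replies can be translated back; this toy model leaves that out.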

We can now assign a storage policy to this newly created namespace.

- Click Add Storage and select the storage policy. In my case, I am using the Tanzu Storage Policy, which uses a vsanDatastore.

Let's apply some capacity and usage limits to this namespace. Click Edit Limits and provide the values.

Let's set user permissions on this namespace. Click Add Permissions.

Now we are ready to hand over this new namespace to the dev user (John).

Under the first tile, click Copy link and provide this link to the dev user. He can open it in a web browser to download the CLI tools and connect to the newly created namespace.

Download and install the CLI tools. In my case, the CLI tools are installed on a CentOS 7.x VM. You can also see that user John has connected to the newly created namespace using the CLI.
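If you prefer to script the login, the sketch below builds the kubectl vsphere login command provided by the vSphere plugin for kubectl and runs it with subprocess; after logging in, the namespace is selected as a kubectl context of the same name. The supervisor address and the SSO user name john@vsphere.local are assumptions for illustration, and --insecure-skip-tls-verify is only appropriate in a lab where the supervisor certificate is not trusted. The same helper can target a Tanzu Kubernetes cluster through the optional cluster arguments, which is what the later step of logging in to the cluster directly needs.

```python
import subprocess
from typing import List, Optional


def vsphere_login_cmd(server: str, username: str,
                      tkc_name: Optional[str] = None,
                      tkc_namespace: Optional[str] = None,
                      insecure: bool = False) -> List[str]:
    """Build a `kubectl vsphere login` command line.

    Without tkc_name the login targets the supervisor cluster; with tkc_name
    and tkc_namespace it targets that Tanzu Kubernetes cluster instead.
    """
    cmd = ["kubectl", "vsphere", "login", f"--server={server}",
           "--vsphere-username", username]
    if tkc_name:
        if not tkc_namespace:
            raise ValueError("tkc_namespace is required when tkc_name is set")
        cmd += ["--tanzu-kubernetes-cluster-name", tkc_name,
                "--tanzu-kubernetes-cluster-namespace", tkc_namespace]
    if insecure:
        # Skips certificate verification: acceptable in a lab only.
        cmd.append("--insecure-skip-tls-verify")
    return cmd


def login_to_namespace(server: str, username: str, namespace: str) -> None:
    """Log in to the supervisor cluster, then switch to the namespace context."""
    # The plugin may prompt for the vSphere password interactively.
    subprocess.run(vsphere_login_cmd(server, username, insecure=True), check=True)
    subprocess.run(["kubectl", "config", "use-context", namespace], check=True)
```

Usage, with a placeholder address: login_to_namespace("<supervisor-address>", "john@vsphere.local", "ns-01-dev-john").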

The user can now verify the resource limits of the namespace using kubectl.

You can see the following limits:

- cpu-limit: 21.818

- memory-limit: 131072Mi

- storage: 500Gi
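The memory and storage values use Kubernetes binary suffixes (Mi, Gi), which are powers of 1024. The small parser below is a simplified sketch that handles only integer values with the binary suffixes Ki, Mi, Gi, and Ti; it is not the full Kubernetes quantity grammar, which also allows decimal suffixes such as M and G and fractional values.

```python
import re

# Binary (power-of-1024) suffixes used by Kubernetes resource quantities.
BINARY_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '131072Mi' to bytes (binary suffixes only)."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti)", quantity.strip())
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(number) * BINARY_SUFFIXES[suffix]


def to_gib(quantity: str) -> float:
    """Express a binary-suffixed quantity in GiB."""
    return to_bytes(quantity) / 1024**3


if __name__ == "__main__":
    print(to_gib("131072Mi"))  # memory-limit -> 128.0
    print(to_gib("500Gi"))     # storage -> 500.0
```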

The storage limit (500 GiB) and the memory limit (131072 MiB, that is, 128 GiB) are straightforward. The CPU limit needs a little more explanation. We (the vSphere admin) set the CPU limit to 48 GHz, but the namespace reports a cpu-limit of 21.818 CPU cores, because Kubernetes expresses CPU in cores rather than in GHz. The ESXi hosts I am using for this study each have 20 physical cores and a total CPU capacity of 44 GHz, and there are 4 such hosts in the cluster. The computing power of one physical core is therefore 44 / 20 = 2.2 GHz, so limiting the namespace to 48 GHz corresponds to 48 / 2.2 = 21.818 CPU cores.
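The GHz-to-cores conversion in the paragraph above can be reproduced in a few lines of Python. Fraction keeps the arithmetic exact (the result is 240/11 cores), so rounding happens only once at the end. This sketch simply re-derives the number from the host figures in this lab, assuming all hosts are identical; the number of hosts does not enter the per-core conversion.

```python
from fractions import Fraction

# Figures from this lab: per-host CPU capacity, physical cores per host,
# and the CPU limit set on the namespace.
HOST_CAPACITY_GHZ = Fraction(44)
HOST_PHYSICAL_CORES = 20
NAMESPACE_LIMIT_GHZ = Fraction(48)


def ghz_to_cores(limit_ghz: Fraction, host_ghz: Fraction, host_cores: int) -> Fraction:
    """Express a GHz limit as a number of physical cores of the given host type."""
    ghz_per_core = host_ghz / host_cores  # 44 / 20 = 2.2 GHz per core
    return limit_ghz / ghz_per_core       # 48 / 2.2 = 240/11 cores


cores = ghz_to_cores(NAMESPACE_LIMIT_GHZ, HOST_CAPACITY_GHZ, HOST_PHYSICAL_CORES)
print(cores, round(float(cores), 3))  # 240/11 21.818
```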

Apply the following cluster definition YAML file (for example, with kubectl apply -f) to create a Tanzu Kubernetes cluster in the ns-01-dev-john namespace.

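```yaml
apiVersion: run.tanzu.vmware.com/v1alpha1
kind: TanzuKubernetesCluster
metadata:
  name: tkg-cluster-01
  namespace: ns-01-dev-john          # supervisor namespace created earlier
spec:
  topology:
    controlPlane:
      count: 3                       # three control plane VMs
      class: guaranteed-medium       # VM class for control plane nodes
      storageClass: tanzu-storage-policy
    workers:
      count: 3                       # three worker VMs
      class: guaranteed-xlarge       # VM class for worker nodes
      storageClass: tanzu-storage-policy
  distribution:
    version: v1.18.15                # Kubernetes release for the cluster
  settings:
    network:
      services:
        cidrBlocks: ["198.32.1.0/12"]    # Kubernetes Service IP range
      pods:
        cidrBlocks: ["192.1.1.0/16"]     # pod IP range
      cni:
        name: calico
    storage:
      defaultClass: tanzu-storage-policy # default StorageClass inside the cluster
```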
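The pod and service ranges of a Tanzu Kubernetes cluster should not overlap each other or the networks used by the supervisor cluster. A quick sanity check with Python's ipaddress module is sketched below. Note that both CIDRs in the spec have host bits set (198.32.1.0/12 normalizes to 198.32.0.0/12, and 192.1.1.0/16 to 192.1.0.0/16), so ip_network only accepts them with strict=False. The egress range is the only supervisor network listed in this post; in a real check you would add the other ranges from your workload management configuration.

```python
import ipaddress
from itertools import combinations

# CIDRs from the TanzuKubernetesCluster spec, plus the egress range
# configured for workload management.
networks = {
    "services": "198.32.1.0/12",
    "pods": "192.1.1.0/16",
    "egress": "192.168.72.0/24",
}


def parse(cidrs):
    """Parse CIDR strings; strict=False masks off host bits instead of raising."""
    return {name: ipaddress.ip_network(c, strict=False) for name, c in cidrs.items()}


def overlaps(nets):
    """Return every pair of named networks whose address ranges overlap."""
    return [(a, b) for (a, na), (b, nb) in combinations(nets.items(), 2)
            if na.overlaps(nb)]


parsed = parse(networks)
for name, net in parsed.items():
    print(f"{name}: {networks[name]} -> {net} ({net.num_addresses} addresses)")
print("overlapping pairs:", overlaps(parsed))
```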

Log in to the Tanzu Kubernetes cluster directly using the CLI and verify the deployment.
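One way to verify the cluster from a script is to read kubectl get nodes -o json and count the Ready nodes, expecting 3 control plane and 3 worker nodes as set in the spec. This sketch assumes the current kubectl context points at tkg-cluster-01. It treats a node as control plane if it carries the node-role.kubernetes.io/master label (used by Kubernetes 1.18) or the newer node-role.kubernetes.io/control-plane label.

```python
import json
import subprocess

CONTROL_PLANE_LABELS = ("node-role.kubernetes.io/master",
                        "node-role.kubernetes.io/control-plane")


def summarize_nodes(nodes_json) -> dict:
    """Count Ready control plane and worker nodes in `kubectl get nodes -o json` output."""
    summary = {"control_plane_ready": 0, "workers_ready": 0, "not_ready": 0}
    for node in json.loads(nodes_json)["items"]:
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(c.get("type") == "Ready" and c.get("status") == "True"
                    for c in conditions)
        if not ready:
            summary["not_ready"] += 1
            continue
        labels = node.get("metadata", {}).get("labels", {})
        if any(label in labels for label in CONTROL_PLANE_LABELS):
            summary["control_plane_ready"] += 1
        else:
            summary["workers_ready"] += 1
    return summary


def cluster_matches_spec(expected_cp: int = 3, expected_workers: int = 3) -> bool:
    """Run kubectl against the current context and compare with the spec counts."""
    out = subprocess.check_output(["kubectl", "get", "nodes", "-o", "json"])
    s = summarize_nodes(out)
    return (s["control_plane_ready"], s["workers_ready"], s["not_ready"]) == \
        (expected_cp, expected_workers, 0)
```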

You can see the corresponding VMs in the vCenter UI.

Now, let's have a look at the NSX-T side.

- A Tier-1 Gateway is now available with a segment linked to it.

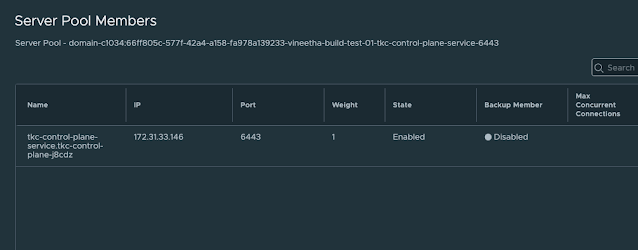

- You can see a load balancer with one virtual server that provides access to the Kubernetes API (port 6443) of the Tanzu Kubernetes cluster that we just deployed.

- You can also find a SNAT rule. It lets objects in the Tanzu Kubernetes cluster reach the external network/internet, again using the egress range 192.168.72.0/24 that we provided during the workload management configuration for address translation.
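To confirm from a client machine that the load balancer's virtual server answers on the Kubernetes API port, a plain TCP connection test is enough. This sketch only checks TCP reachability of port 6443; it does not validate TLS or authentication. The address you pass in should be the virtual server IP shown in NSX-T; any address used below is a placeholder.

```python
import socket


def port_open(host: str, port: int = 6443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and unreachable hosts
        return False
```

For example, port_open("<virtual-server-ip>") returns True once the virtual server is up and reachable from the client.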

Note: This architecture is explained on the basis of vSphere 7 U1, and newer versions introduce changes. With vSphere 7 U1c, the architecture changed from a per-TKG cluster Tier-1 Gateway model to a per-Supervisor namespace Tier-1 Gateway model. For more details, refer to the blog series published by Harikrishnan T (@hari5611).

In the next part, we will discuss monitoring aspects of the vSphere with Tanzu environment and Tanzu Kubernetes clusters. I hope this was useful. Cheers!