In this article, we will look at how to fix vSphere Pods that are stuck in the ProviderFailed status. Following is an example:

    svc-opa-gatekeeper-domain-c61   gatekeeper-controller-manager-5ccbc7fd79-5gn2n   0/1   ProviderFailed   0   2d14h
    svc-opa-gatekeeper-domain-c61   gatekeeper-controller-manager-5ccbc7fd79-5jtvj   0/1   ProviderFailed   0   2d13h
    svc-opa-gatekeeper-domain-c61   gatekeeper-controller-manager-5ccbc7fd79-5whtt   0/1   ProviderFailed   0   2d14h
    svc-opa-gatekeeper-domain-c61   gatekeeper-controller-manager-5ccbc7fd79-6p2zv   0/1   ProviderFailed   0   2d13h
    svc-opa-gatekeeper-domain-c61   gatekeeper-controller-manager-5ccbc7fd79-7r92p   0/1   ProviderFailed   0   2d14h

When describing one of these pods, you can see the message "Unable to find backing for logical switch".
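When many pods are affected, a quick count per namespace shows how widespread the problem is. The following is a minimal sketch, assuming the default `kubectl get pods -A` column layout where STATUS is the fourth field; the function name `count_provider_failed` is hypothetical.

```shell
# Count pods in the ProviderFailed status, per namespace.
# Reads `kubectl get pods -A` output on stdin and assumes the default
# columns: NAMESPACE NAME READY STATUS RESTARTS AGE (STATUS is field 4).
# The header line never matches because its 4th field is "STATUS".
count_provider_failed() {
  awk '$4 == "ProviderFailed" { count[$1]++ }
       END { for (ns in count) print ns, count[ns] }' | sort
}

# Usage:
#   kubectl get pods -A | count_provider_failed
```

Matching on the STATUS column rather than using grep avoids false hits on pod names or other columns that happen to contain the string.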

    ❯ kubectl describe pod gatekeeper-controller-manager-5ccbc7fd79-5gn2n -n svc-opa-gatekeeper-domain-c61
    Name:                 gatekeeper-controller-manager-5ccbc7fd79-5gn2n
    Namespace:            svc-opa-gatekeeper-domain-c61
    Priority:             2000000000
    Priority Class Name:  system-cluster-critical
    Node:                 esx-1.sddc-35-82-xxxxx.xxxxxxx.com/
    Labels:               control-plane=controller-manager
                          gatekeeper.sh/operation=webhook
                          gatekeeper.sh/system=yes
                          pod-template-hash=5ccbc7fd79
    Annotations:          attachment_id: 668b681b-fef6-43e5-8009-5ac8deb6da11
                          kubernetes.io/psp: wcp-default-psp
                          mac: 04:50:56:00:08:1e
                          vlan: None
                          vmware-system-ephemeral-disk-uuid: 6000C297-d1ba-ce8c-97ba-683a3c8f5321
                          vmware-system-image-references: {"manager":"gatekeeper-111fd0f684141bdad12c811b4f954ae3d60a6c27-v52049"}
                          vmware-system-vm-moid: vm-89777:750f38c6-3b0e-41b7-a94f-4d4aef08e19b
                          vmware-system-vm-uuid: 500c9c37-7055-1708-92d4-8ffdf932c8f9
    Status:               Failed
    Reason:               ProviderFailed
    Message:              Unable to find backing for logical switch 03f0dcd4-a5d9-431e-ae9e-d796ddca0131: timed out waiting for the condition Unable to find backing for logical switch: 03f0dcd4-a5d9-431e-ae9e-d796ddca0131
    IP:
    IPs:                  <none>
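If many pods fail, it can help to check whether they all report the same logical switch. The following is a minimal sketch that pulls the switch IDs out of describe output. It assumes the message format shown above and lowercase 36-character UUIDs; the function name `failed_switch_ids` is hypothetical.

```shell
# Print each distinct logical switch ID found in
# "Unable to find backing for logical switch <id>" messages.
# Reads `kubectl describe pod(s)` output on stdin. Assumes the ID is a
# lowercase 36-character UUID, as in the example message above.
failed_switch_ids() {
  grep -oE 'backing for logical switch:? [0-9a-f-]{36}' |
    awk '{ print $NF }' |
    sort -u
}

# Usage:
#   kubectl describe pods -n svc-opa-gatekeeper-domain-c61 | failed_switch_ids
```

Because the message repeats the ID twice (once with a colon after "switch" and once without), the optional `:?` in the pattern catches both forms and `sort -u` collapses the duplicates.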

The fix is to restart the spherelet service on the ESXi worker nodes of the cluster. First, connect to vCenter Server with PowerCLI:

    > Connect-VIServer wdc-10-vc21

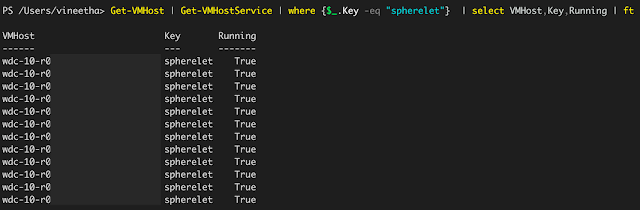

Then check the state of the spherelet service on every ESXi host:

    > Get-VMHost | Get-VMHostService | where {$_.Key -eq "spherelet"} | select VMHost,Key,Running | ft

    VMHost                          Key        Running
    ------                          ---        -------
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True
    wdc-10-r0xxxxxxxxxxxxxxxxxxxx   spherelet  True

To restart spherelet on a single host, stop the service and then start it again:

    > $sphereletservice = Get-VMHost wdc-10-r0xxxxxxxxxxxxxxxxxxxx | Get-VMHostService | where {$_.Key -eq "spherelet"}
    > Stop-VMHostService -HostService $sphereletservice

    Perform operation?
    Perform operation Stop host service. on spherelet?
    [Y] Yes  [A] Yes to All  [N] No  [L] No to All  [S] Suspend  [?] Help (default is "Y"): Y

    Key        Label      Policy  Running  Required
    ---        -----      ------  -------  --------
    spherelet  spherelet  on      False    False

    > Get-VMHost wdc-10-r0xxxxxxxxxxxxxxxxxxxx | Get-VMHostService | where {$_.Key -eq "spherelet"}

    Key        Label      Policy  Running  Required
    ---        -----      ------  -------  --------
    spherelet  spherelet  on      False    False

    > Start-VMHostService -HostService $sphereletservice

    Key        Label      Policy  Running  Required
    ---        -----      ------  -------  --------
    spherelet  spherelet  on      True     False

To restart spherelet on all hosts of the cluster in one command, look up the cluster name and pipe its hosts to Restart-VMHostService:

    > Get-Cluster

    Name            HAEnabled  HAFailover  DrsEnabled  DrsAutomationLevel
                               Level
    ----            ---------  ----------  ----------  ------------------
    wdc-10-vcxxc01  True       1           True        FullyAutomated

    > Get-Cluster -Name wdc-10-vcxxc01 | Get-VMHost | foreach { Restart-VMHostService -HostService ($_ | Get-VMHostService | where {$_.Key -eq "spherelet"}) }
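Before running the delete command below, you may want to review exactly which pods it will remove. The following is a minimal sketch that only prints one delete command per ProviderFailed pod, assuming the default `kubectl get pods -A` column layout; the function name `preview_provider_failed_deletes` is hypothetical.

```shell
# Print (but do not run) one `kubectl delete pod` command per pod in the
# ProviderFailed status, so the list can be reviewed before anything is deleted.
# Assumes the default `kubectl get pods -A` columns (NAMESPACE is field 1,
# NAME is field 2, STATUS is field 4).
preview_provider_failed_deletes() {
  awk '$4 == "ProviderFailed" { printf "kubectl delete pod %s --namespace=%s\n", $2, $1 }'
}

# Usage:
#   kubectl get pods -A | preview_provider_failed_deletes        # review the commands
#   kubectl get pods -A | preview_provider_failed_deletes | sh   # then run them
```

Emitting one command per line keeps each pod paired with its own namespace, which matters when failed pods are spread across several namespaces.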

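Finally, delete the pods that are still in ProviderFailed so that their controllers (here, the gatekeeper ReplicaSet) recreate them. The -L 1 option makes xargs run one kubectl delete per pod; without it, all pods are passed to a single command, and kubectl uses only the last --namespace value for every pod:

    kubectl get pods -A | grep ProviderFailed | awk '{print $2 " --namespace=" $1}' | xargs -L 1 kubectl delete pod

Hope it was useful. Cheers!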