In this post, I will explain the steps to expand an existing vROps installation and enable high availability.

Before making a design or architecture decision on enabling high availability for vROps, I strongly recommend reading the VMware vROps documentation topics below and understanding the functional and technical implications.

- About vRealize Operations Manager High Availability

- High Availability Considerations

- About vRealize Operations Manager Cluster Nodes

Expand an existing installation

Deploy a new vROps appliance. Once the deployment is complete, open the management IP of the appliance in a web browser. This time, select the option to expand an existing installation.

Click next.

Provide a name for this new node.

Select the node type as "Data".

Provide the IP address or FQDN of the master node and click validate.
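If validation fails, it can help to rule out basic name resolution or network problems first. Below is a minimal sketch, assuming the master node serves its admin UI over HTTPS on TCP port 443; the function name `check_master_reachable` is hypothetical and only tests DNS resolution and TCP reachability, not the vROps validation itself.

```python
import socket


def check_master_reachable(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if `host` resolves and accepts a TCP connection on `port`.

    Assumption: port 443 (HTTPS) is the port of interest. This does not
    check certificates or anything vROps-specific.
    """
    try:
        # create_connection resolves the name and tries each returned address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures (socket.gaierror), refused connections, and timeouts.
        return False
```

For example, `check_master_reachable("vrops-master.example.com")` (a placeholder FQDN, not one from this environment) returns `False` if the name does not resolve or the port is unreachable from where the script runs. Ideally, run it from a machine on the same network as the new data node.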

Accept the certificate and click next.

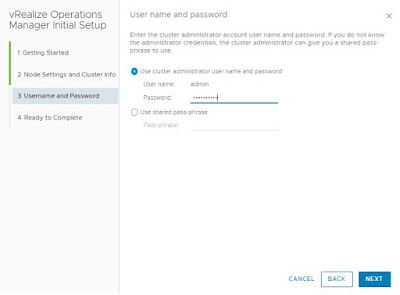

Provide the admin password and click next.

Note: If you don't know the admin password, you can ask the vROps administrator for a shared passphrase and use that instead.

Click finish.

After this step, you will be redirected automatically to the admin page.

As you can see in the above screenshot, the installation is in progress and is waiting to finish the cluster expansion. This may take a few minutes. Once it is complete, a "Finish adding new node(s)" button appears.

Click "Finish adding new node(s)" and click ok.

This will take a few minutes.

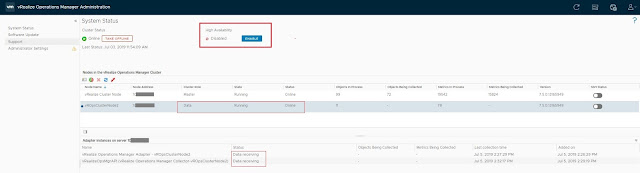

Now you can see that the new data node is online and running. The next step is to enable high availability.

Enable High Availability

To configure high availability, click "Enable".

Cluster Restart Required: The cluster needs to be restarted in order to configure HA. This may require up to 20 minutes during which vRealize Operations Manager will not be available.

To ensure complete protection the two nodes (Master and Replica) should not share hardware.
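The "should not share hardware" requirement can be checked against your own inventory before enabling HA. Here is a minimal sketch, assuming you already have a mapping of vROps node names to the physical hosts they run on; the node names, host names, and the function `nodes_share_host` are all hypothetical.

```python
from typing import Mapping


def nodes_share_host(placement: Mapping[str, str], node_a: str, node_b: str) -> bool:
    """Return True if both nodes are recorded as running on the same physical host."""
    return placement[node_a] == placement[node_b]


# Hypothetical inventory: vROps node name -> physical host it runs on.
placement = {
    "vrops-master": "esxi-01",
    "vrops-data-01": "esxi-02",
}

if nodes_share_host(placement, "vrops-master", "vrops-data-01"):
    print("Warning: master and replica candidates share a host")
else:
    print("OK: master and replica candidates are on different hosts")
```

This only checks the placement at one point in time. If the VMs can migrate between hosts, a VM-VM anti-affinity rule in vSphere DRS is the usual way to keep them apart.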

Click Ok.

Click "Yes" to continue HA configuration.

This will take a few minutes. The cluster will be taken offline for enabling HA.
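If you would rather not keep refreshing the page while the cluster restarts, a small polling loop can do the waiting. This is a minimal sketch: the 20-minute default comes from the restart notice above, while `wait_until`, the 30-second interval, and the caller-supplied `probe` are assumptions. What the probe checks is up to you, for example whether the admin UI port accepts connections, which on its own does not prove the cluster is fully online.

```python
import time
from typing import Callable


def wait_until(
    probe: Callable[[], bool],
    timeout_s: float = 20 * 60,
    interval_s: float = 30.0,
) -> bool:
    """Call `probe` repeatedly until it returns True or the timeout would be exceeded."""
    deadline = time.monotonic() + timeout_s
    while True:
        if probe():
            return True
        # Give up if sleeping once more would take us past the deadline.
        if time.monotonic() + interval_s > deadline:
            return False
        time.sleep(interval_s)
```

Call it as `wait_until(my_probe)`, where `my_probe` is any zero-argument function that returns `True` once you consider the cluster available. Confirm the final state in the admin UI either way.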

After a few minutes, the cluster is back online with HA enabled. As you can see in the screenshot below, one node is "Master" and the other is "Master Replica".

vROps also has dashboards that show the health status of the complete vROps environment, cluster statistics, and performance details of vROps itself. A sample screenshot of the "Self Health" dashboard is shown below.