Finally I managed to write some PowerShell code, inspired by my new PS geek friends. The scripts can be used to generate load and stress test your S2D cluster as well as a traditional Hyper-V 2016 cluster. They are functionally similar to VM Fleet. There are 7 scripts in total.

- create_clustered_testvms.ps1 : this script creates virtual machines on the cluster nodes which will be used for stress testing

- start_all_testvms.ps1 : start all those clustered VMs that you just created

- io_stress_trigger.ps1 : to trigger IO stress on all VMs using diskspd

- rebalance_all_testvms.ps1 : this script is originally from winblog; I just made a small change so that it uses live migration while moving the clustered VMs back to their owner node

- watch_iops_live.ps1 : to view read, write and total IOPS of each CSV disk on the S2D cluster

- stop_all_testvms.ps1 : to shut down all the clustered VMs

- wipeoff_testvms.ps1 : to delete all VMs that you created using the first script

The PS version I am using is given below.

Now I will briefly explain how to use these scripts, along with a few prerequisites. Say you have a 4-node hyper-converged S2D cluster.

As there are 4 nodes, you should have 4 cluster shared volumes (CSV). Assign one CSV to each cluster node as shown below. This means that VMs created on NODE-01 will be placed on CSV Volume AA, VMs created on NODE-02 will be placed on Volume BB, and so on.

Volume AA is C:\ClusterStorage\Volume1

Similarly,

Volume BB is C:\ClusterStorage\Volume2

Volume CC is C:\ClusterStorage\Volume3

Volume DD is C:\ClusterStorage\Volume4
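The scripts further down rely on this convention: the node at position i in the node list uses C:\ClusterStorage\Volume<i>. Here is a minimal sketch of that mapping. The helper name Get-TestVolumePath and the hard-coded node names are only for illustration; on a real cluster the list would come from (Get-ClusterNode).Name, and it is an assumption that the order returned there matches your volume numbering.

```powershell
# Hypothetical helper: maps a 1-based node position to its CSV mount point,
# mirroring the "C:\ClusterStorage\Volume$i" convention used by the scripts.
function Get-TestVolumePath {
    param(
        [Parameter(Mandatory)][int]$NodeIndex,
        [string]$Root = 'C:\ClusterStorage'
    )
    return "$Root\Volume$NodeIndex"
}

# Example node list (assumption); on a cluster node use (Get-ClusterNode).Name
$nodes = 'NODE-01', 'NODE-02', 'NODE-03', 'NODE-04'

# Build one Node/Volume pair per node
$mapping = for ($i = 1; $i -le $nodes.Count; $i++) {
    [pscustomobject]@{
        Node   = $nodes[$i - 1]
        Volume = Get-TestVolumePath -NodeIndex $i
    }
}

$mapping | Format-Table -AutoSize
```

If the table does not match the way your CSVs are actually assigned, fix the assignment before creating any testvms.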

Your cluster shared volumes are ready now. Create 2 folders inside Volume1, named "scripts" and "template", as shown below.

Copy all 7 PS scripts to the scripts folder. The code of each script is given at the end.

You now need a template VHDX, which has to be copied to the template folder.

Note: The file should be named "template" (the scripts expect template.vhdx).

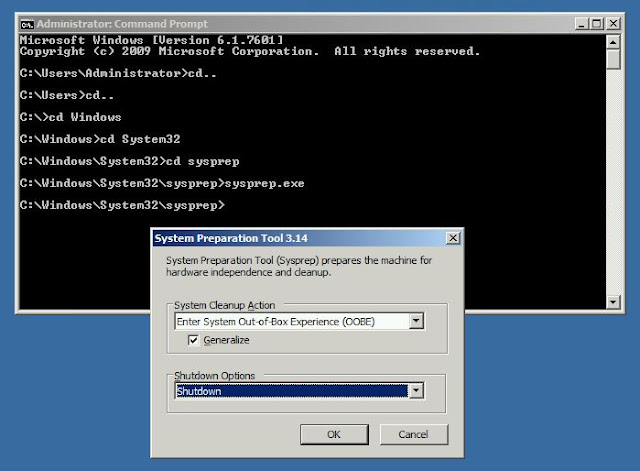

This template is nothing but a Windows Server 2016 VM created on a dynamically expanding disk. So you just have to create a VM with a dynamically expanding VHDX, install Windows Server 2016 and set the local administrator password to "Pass1234". Download diskspd from Microsoft, unzip it and copy diskspd.exe to the C drive of the VM you just created.

Shut down the VM. There is no need to sysprep it. Copy the VHDX disk of the VM to the template folder and rename it to "template". The disk will be around 9.5 GB in size. Once the template is copied, you are all set to start.
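Before moving on to step 1, it may be worth checking that the folders and the template disk are where the scripts expect them. The following is only a sketch: the function name Get-MissingTestPrerequisite is made up for this example, and the paths are the ones used in this post.

```powershell
# Hypothetical preflight helper: returns every expected item that is
# missing under the given root folder (nothing is returned if all exist).
function Get-MissingTestPrerequisite {
    param([Parameter(Mandatory)][string]$Root)

    $templateDir = Join-Path $Root 'template'
    $expected = @(
        (Join-Path $Root 'scripts'),
        $templateDir,
        (Join-Path $templateDir 'template.vhdx')
    )

    # Keep only the paths that do not exist
    $expected | Where-Object { -not (Test-Path -LiteralPath $_) }
}

$missing = @(Get-MissingTestPrerequisite -Root 'C:\ClusterStorage\Volume1')
if ($missing.Count -eq 0) {
    'All prerequisites found.'
} else {
    "Missing: $($missing -join ', ')"
}
```

The check only looks at paths. It does not verify that diskspd.exe is inside the template or that the administrator password is set as described.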

Step 1:

Run create_clustered_testvms.ps1

This will create clustered testvms on each of the nodes, such that VMs on NODE-01 are stored on Volume1, VMs on NODE-02 are stored on Volume2, and so on.

Step 2:

Run start_all_testvms.ps1

This will start all the testvms.

Step 3:

Wait for a few seconds to ensure all the testvms have booted properly; then run io_stress_trigger.ps1 and provide the necessary input parameters.
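To make the input parameters less mysterious, here is a rough sketch of how they end up on the diskspd command line. The function name New-DiskSpdArgument and its defaults are my own for illustration (the defaults are the example values from the prompts); the flags themselves are the ones io_stress_trigger.ps1 passes to diskspd.exe.

```powershell
# Hypothetical helper: builds the diskspd argument list that
# io_stress_trigger.ps1 assembles from the values you type in.
function New-DiskSpdArgument {
    param(
        [int]$DurationSeconds = 300,
        [string]$BlockSize = '4K',
        [ValidateRange(0, 100)][int]$WritePercent = 20,
        [int]$OutstandingIO = 16,
        [int]$Threads = 2,
        [string]$Target = 'D:\io_stress.dat'
    )
    @(
        "-b$BlockSize"        # block size
        "-d$DurationSeconds"  # test duration in seconds
        "-t$Threads"          # threads per target
        "-o$OutstandingIO"    # outstanding I/Os per thread per target
        '-h'                  # disable software caching and hardware write caching
        '-r'                  # random I/O
        "-w$WritePercent"     # percentage of writes
        '-L'                  # collect latency statistics
        '-Z500M'              # 500 MB buffer of random data as the write source
        '-c38G'               # create a 38 GB test file
        $Target
    )
}

$diskspdArgs = New-DiskSpdArgument -BlockSize '8K' -WritePercent 30
"C:\diskspd.exe $($diskspdArgs -join ' ')"
# prints: C:\diskspd.exe -b8K -d300 -t2 -o16 -h -r -w30 -L -Z500M -c38G D:\io_stress.dat
```

The 38 GB test file fits on the 40 GB fixed test disk (drive D:) that the first script attaches to every testvm.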

Step 4:

You can watch IOPS of the cluster using watch_iops_live.ps1

If you would like to live migrate some testvms while the stress test is running, you can try it and observe the IO variations. But before running io_stress_trigger.ps1 again, you have to migrate all those testvms back to their preferred owners. This can be done using rebalance_all_testvms.ps1.

If any testvms are not running on their preferred owner, io_stress_trigger.ps1 will fail for those VMs. Here, testvms created on NODE-01 have NODE-01 as their preferred owner, and similarly for all other testvms. So you have to make sure all the testvms are running on their preferred owner before starting the IO stress script.
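A quick way to see which testvms would fail is to compare the current owner with the preferred owner. The sketch below works on plain objects so that it can be tried anywhere; the function name Get-MisplacedTestVM and the sample data are assumptions for illustration. The commented part shows how the same list might be built on a cluster node with Get-ClusterGroup and Get-ClusterOwnerNode.

```powershell
# Hypothetical helper: given objects with Name, CurrentOwner and
# PreferredOwner, return those not running on their preferred owner.
# Entries without a preferred owner are skipped.
function Get-MisplacedTestVM {
    param([Parameter(Mandatory)][object[]]$Placement)
    $Placement | Where-Object {
        $_.PreferredOwner -and ($_.CurrentOwner -ne $_.PreferredOwner)
    }
}

# Sample data for illustration. On a cluster node the list could be built with:
#   Get-ClusterGroup | Where-Object Name -like 'testvm-*' | ForEach-Object {
#       [pscustomobject]@{
#           Name           = $_.Name
#           CurrentOwner   = $_.OwnerNode.Name
#           PreferredOwner = ($_ | Get-ClusterOwnerNode).OwnerNodes[0].Name
#       }
#   }
$sample = @(
    [pscustomobject]@{ Name = 'testvm-NODE-01-1'; CurrentOwner = 'NODE-01'; PreferredOwner = 'NODE-01' }
    [pscustomobject]@{ Name = 'testvm-NODE-02-1'; CurrentOwner = 'NODE-03'; PreferredOwner = 'NODE-02' }
)

$misplaced = @(Get-MisplacedTestVM -Placement $sample)
$misplaced | ForEach-Object {
    "$($_.Name) is on $($_.CurrentOwner), expected $($_.PreferredOwner)"
}
# prints: testvm-NODE-02-1 is on NODE-03, expected NODE-02
```

If the list is not empty, run rebalance_all_testvms.ps1 first.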

Use stop_all_testvms.ps1 to shut down all the clustered testvms that you created in step 1. To delete all the testvms, you can use wipeoff_testvms.ps1.

NOTE: While running scripts 2, 3, 6 and 7, please make sure all the testvms are running on their preferred owner. Use rebalance_all_testvms.ps1 to move all testvms back to their preferred owners! Also, please run all these scripts in PowerShell with elevated privileges after logging in directly to any of the cluster nodes.

All the code is given below. It might not be optimal but I am pretty sure it works! Cheers!

--------------------------------------------------------------------------------------------------------------------------

#BEGIN_create_clustered_testvms.ps1

#Get cluster info

$Cluster_name = (Get-Cluster).name

$Nodes_name = (Get-ClusterNode).name

$Node_count = (Get-ClusterNode).count

#Input VM config (cast to int so that the multiplication below is numeric, not string repetition)

[int]$VM_count = Read-Host "Enter number of VMs/ node"

$Cluster_VM_count = $VM_count*$Node_count

[int64]$RAM = Read-Host "Enter memory for each VM in MB Eg: 4096"

$RAM = 1MB*$RAM

$CPU = Read-Host "Enter CPU for each VM"

#Creds to Enter-PSSession

$pass = convertto-securestring -asplaintext -force -string Pass1234

$cred = new-object -typename system.management.automation.pscredential -argumentlist "administrator", $pass

#Loop for each node in cluster

for($i=1; $i -le $Node_count; $i++){

$VM_path = "C:\ClusterStorage\Volume$i"

$Node = $Nodes_name[$i-1]

#Remote session to each node

$S1 = New-PSSession -ComputerName $Node -Credential $cred

#Loop for creating new testvms on each node

for($j=1; $j -le $VM_count; $j++){

$VM_name = "testvm-$Node-$j"

new-vm -name $VM_name -computername $Node -memorystartupbytes $RAM -generation 2 -Path $VM_path

set-vm -name $VM_name -ProcessorCount $CPU -ComputerName $Node

New-Item -path $VM_path\$VM_name -name "Virtual Hard Disks" -type directory

#Copy template disk

Copy-Item "C:\ClusterStorage\Volume1\template\template.vhdx" -Destination "$VM_path\$VM_name\Virtual Hard Disks" -Verbose

Add-VMHardDiskDrive -VMName $VM_name -ComputerName $Node -path "$VM_path\$VM_name\Virtual Hard Disks\template.vhdx" -Verbose

#Create new fixed test disk

New-VHD -Path "$VM_path\$VM_name\Virtual Hard Disks\test_disk.vhdx" -Fixed -SizeBytes 40GB

Add-VMHardDiskDrive -VMName $VM_name -ComputerName $Node -path "$VM_path\$VM_name\Virtual Hard Disks\test_disk.vhdx" -Verbose

Get-VM -ComputerName $Node -VMName $VM_name | Start-VM

Add-ClusterVirtualMachineRole -VirtualMachine $VM_name

Set-ClusterOwnerNode -Group $VM_name -owner $Node

Start-Sleep -S 10

#Remote session to each testvm on the node to initialize and format test disk (drive D:)

Invoke-Command -Session $S1 -ScriptBlock {param($VM_name2,$cred2) Invoke-Command -VMName $VM_name2 -Credential $cred2 -ScriptBlock {

Initialize-Disk -Number 1 -PartitionStyle MBR

New-Partition -DiskNumber 1 -UseMaximumSize -DriveLetter D

Get-Volume | where DriveLetter -eq D | Format-Volume -FileSystem NTFS -NewFileSystemLabel Test_disk -confirm:$false

}} -ArgumentList $VM_name,$cred

Start-Sleep -S 5

Get-VM -ComputerName $Node -VMName $VM_name | Stop-VM -Force

}

}

#END_create_clustered_testvms.ps1

--------------------------------------------------------------------------------------------------------------------------

#BEGIN_start_all_testvms.ps1

#Get cluster info

$Cluster_name = (Get-Cluster).name

$Nodes_name = (Get-ClusterNode).name

$Node_count = (Get-ClusterNode).count

for($i=1; $i -le $Node_count; $i++){

$Node = $Nodes_name[$i-1]

Get-VM -ComputerName $Node -VMName "testvm-$Node*" | Start-VM -AsJob

}

#END_start_all_testvms.ps1

--------------------------------------------------------------------------------------------------------------------------

#BEGIN_io_stress_trigger.ps1

#Get cluster info

$Cluster_name = (Get-Cluster).name

$Nodes_name = (Get-ClusterNode).name

$Node_count = (Get-ClusterNode).count

#Creds to Enter-PSSession

$pass = convertto-securestring -asplaintext -force -string Pass1234

$cred = new-object -typename system.management.automation.pscredential -argumentlist "administrator", $pass

$time = Read-Host "Enter duration of stress in seconds (Eg: 300)"

$block_size = Read-Host "Enter block size (Eg: 4K)"

$writes = Read-Host "Enter write percentage (Eg: 20)"

$OIO = Read-Host "Enter number of outstanding IOs (Eg: 16)"

$threads = Read-Host "Enter number of threads (Eg: 2)"

#Loop for each node in cluster

for($i=1; $i -le $Node_count; $i++){

$VM_path = "C:\ClusterStorage\Volume$i"

$Node = $Nodes_name[$i-1]

#Remote session to each node

$S1 = New-PSSession -ComputerName $Node -Credential $cred

$VM_count = (Get-VM -ComputerName $Node -VMName "testvm-$Node*").Count

#Loop over the existing testvms on each node

for($j=1; $j -le $VM_count; $j++){

$VM_name = "testvm-$Node-$j"

#Remote session to each testvm

Invoke-Command -Session $S1 -ScriptBlock {param($VM_name2,$cred2,$time1,$block_size1,$writes1,$OIO1,$threads1) Invoke-Command -VMName $VM_name2 -Credential $cred2 -ScriptBlock {param($time2,$block_size2,$writes2,$OIO2,$threads2)

C:\diskspd.exe -"b$block_size2" -"d$time2" -"t$threads2" -"o$OIO2" -h -r -"w$writes2" -L -Z500M -c38G D:\io_stress.dat

} -AsJob -ArgumentList $time1,$block_size1,$writes1,$OIO1,$threads1 } -ArgumentList $VM_name,$cred,$time,$block_size,$writes,$OIO,$threads

}

}

#END_io_stress_trigger.ps1

--------------------------------------------------------------------------------------------------------------------------

#BEGIN_rebalance_all_testvms.ps1

$clustergroups = Get-ClusterGroup | Where-Object {$_.IsCoreGroup -eq $false}

foreach ($cg in $clustergroups)

{

$CGName = $cg.Name

Write-Host "`nWorking on $CGName"

$CurrentOwner = $cg.OwnerNode.Name

$POCount = (($cg | Get-ClusterOwnerNode).OwnerNodes).Count

if ($POCount -eq 0)

{

Write-Host "Info: $CGName doesn't have a preferred owner!" -ForegroundColor Magenta

}

else

{

$PreferredOwner = ($cg | Get-ClusterOwnerNode).Ownernodes[0].Name

if ($CurrentOwner -ne $PreferredOwner)

{

Write-Host "Moving resource to $PreferredOwner, please wait..."

$cg | Move-ClusterVirtualMachineRole -MigrationType Live -Node $PreferredOwner

}

else

{

write-host "Resource is already on preferred owner! ($PreferredOwner)"

}

}

}

Write-Host "`n`nFinished. Current distribution: "

Get-ClusterGroup | Where-Object {$_.IsCoreGroup -eq $false}

#END_rebalance_all_testvms.ps1

--------------------------------------------------------------------------------------------------------------------------

#BEGIN_watch_iops_live.ps1

#Get cluster info

$Cluster_name = (Get-Cluster).name

$Nodes_name = (Get-ClusterNode).name

while($true)

{

[int]$total_IO = 0

[int]$total_readIO = 0

[int]$total_writeIO = 0

clear

"{0,-15} {1,-15} {2,-15} {3,-15} {4, -15} {5, -15}" -f "Host", "Total IOPS", "Reads/Sec", "Writes/Sec", "Read Q Length", "Write Q Length"

for($j=1; $j -le $Nodes_name.count; $j++){

$Node = $Nodes_name[$j-1]

$Data = Get-CimInstance -ClassName Win32_PerfFormattedData_CsvFsPerfProvider_ClusterCSVFS -ComputerName $Node | Where Name -like Volume$j

[int]$T = $Data.ReadsPerSec+$Data.WritesPerSec

"{0,-15} {1,-15} {2,-15} {3,-15} {4,-15} {5, -15}" -f "$Node", "$T", $Data.ReadsPerSec, $Data.WritesPerSec, $Data.CurrentReadQueueLength, $Data.CurrentWriteQueueLength

$total_IO = $total_IO+$T

$total_readIO = $total_readIO+$Data.ReadsPerSec

$total_writeIO = $total_writeIO+$Data.WritesPerSec

}

echo `n

"{0,-15} {1,-15} {2,-15} {3,-15} " -f "Cluster IOPS", "$total_IO", "$total_readIO", "$total_writeIO"

Start-Sleep -Seconds 3

}

#END_watch_iops_live.ps1

--------------------------------------------------------------------------------------------------------------------------

#BEGIN_stop_all_testvms.ps1

#Get cluster info

$Cluster_name = (Get-Cluster).name

$Nodes_name = (Get-ClusterNode).name

$Node_count = (Get-ClusterNode).count

for($i=1; $i -le $Node_count; $i++){

$Node = $Nodes_name[$i-1]

Get-VM -ComputerName $Node -VMName "testvm-$Node*" | Stop-VM -Force -AsJob

}

#END_stop_all_testvms.ps1

--------------------------------------------------------------------------------------------------------------------------

#BEGIN_wipeoff_testvms.ps1

#Get cluster info

$Cluster_name = (Get-Cluster).name

$Nodes_name = (Get-ClusterNode).name

$Node_count = (Get-ClusterNode).count

#Loop for each node in cluster

for($i=1; $i -le $Node_count; $i++){

$VM_path = "C:\ClusterStorage\Volume$i"

$Node = $Nodes_name[$i-1]

$VM_count = (get-vm -ComputerName $Node -Name "testvm-$Node-*").Count

#Loop to delete testvm on each node

for($j=1; $j -le $VM_count; $j++){

$VM_name = "testvm-$Node-$j"

$full_path = "$VM_path\$VM_name"

Get-VM -Computername $Node -VMname $VM_name | stop-vm -force

Get-ClusterGroup $VM_name | Remove-ClusterGroup -Force -RemoveResources

Get-VM -Computername $Node -VMname $VM_name | remove-vm -force

Remove-Item $full_path -Force -Recurse -ErrorAction SilentlyContinue -Verbose

}

}

#END_wipeoff_testvms.ps1

--------------------------------------------------------------------------------------------------------------------------