In this post, we will look at the configuration steps required before enabling Workload Management. These steps establish connectivity to the Supervisor Cluster, the Supervisor Namespaces, and all objects that run inside the namespaces, such as vSphere Pods and Tanzu Kubernetes clusters.

At this point, I assume that the vSAN cluster is up and NSX-T 3.0 is installed. The NSX-T appliance is connected to the same management network as the VCSA and the ESXi nodes. In my case, that is VLAN 41. Note that all the ESXi nodes of the vSAN cluster are connected to a single vSphere Distributed Switch, and each node has two uplinks, one connecting to TOR A and the other to TOR B.

NSX-T configurations

- Add Compute Managers. I've added the vCenter server here.

- Add Uplink Profiles.

  - Create a host uplink profile "nsx-uplink-profile-lbsrc" (this is for host TEP using VLAN 52).

  - Create an edge uplink profile "nsx-edge-uplink-profile-lbsrc" (this is for edge TEP using VLAN 53).

- Add Transport Zones.

  - Create an overlay transport zone "tz-overlay".

  - Create a VLAN transport zone "edge-vlan-tz".

- Add IP Address Pools.

  - Create an IP address pool for host TEPs "TEP-Pool-01" (this is for host TEP using VLAN 52).

  - Create an IP address pool for edge TEPs "Edge-TEP-Pool-01" (this is for edge TEP using VLAN 53).

- Add Transport Node Profiles.

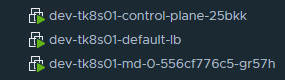

- Configure Host Transport Nodes. Select the required cluster and click Configure NSX to configure all of its ESXi nodes as transport nodes.
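For anyone who prefers to script these objects instead of clicking through the UI, here is a minimal sketch of the request bodies for the uplink profiles and transport zones listed above. It only builds and prints JSON and does not contact NSX Manager. The endpoints named in the docstrings are the NSX-T Manager API paths as I understand them, so check them against the API guide for your version. The helper names, the uplink names `uplink-1` and `uplink-2`, and the `LOADBALANCE_SRCID` teaming policy (inferred from the "lbsrc" suffix in the profile names) are my assumptions, not values taken from the setup.

```python
import json


def uplink_profile(name, transport_vlan, uplinks=("uplink-1", "uplink-2")):
    """Build a body intended for POST /api/v1/host-switch-profiles.

    The uplink names are hypothetical placeholders, and the teaming
    policy is an assumption inferred from "lbsrc" in the profile name.
    """
    return {
        "resource_type": "UplinkHostSwitchProfile",
        "display_name": name,
        "transport_vlan": transport_vlan,  # TEP VLAN carried by this profile
        "teaming": {
            "policy": "LOADBALANCE_SRCID",
            "active_list": [
                {"uplink_name": u, "uplink_type": "PNIC"} for u in uplinks
            ],
        },
    }


def transport_zone(name, transport_type):
    """Build a body intended for POST /api/v1/transport-zones."""
    if transport_type not in ("OVERLAY", "VLAN"):
        raise ValueError(f"unsupported transport type: {transport_type}")
    return {"display_name": name, "transport_type": transport_type}


# Object names and VLAN IDs come from the checklist above.
PLAN = {
    "uplink_profiles": [
        uplink_profile("nsx-uplink-profile-lbsrc", 52),       # host TEP
        uplink_profile("nsx-edge-uplink-profile-lbsrc", 53),  # edge TEP
    ],
    "transport_zones": [
        transport_zone("tz-overlay", "OVERLAY"),
        transport_zone("edge-vlan-tz", "VLAN"),
    ],
}

if __name__ == "__main__":
    print(json.dumps(PLAN, indent=2))
```

Keeping the payloads as plain data makes it easy to review the VLAN IDs and names before sending anything to NSX Manager with the HTTP client of your choice. The IP address pools are left out on purpose, because their address ranges are specific to each environment.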