Sunday, November 8, 2020

Dell EMC PowerFlex MP for vROps 8.x - Part4 - Resource kinds and relationships

In this post, we will take a look at the different resource kinds that are part of the Dell EMC PowerFlex Management Pack. The following is a very high-level logical representation of the PowerFlex adapter resource kinds and their relationships:

Part1 - Install

Wednesday, November 4, 2020

Dell EMC PowerFlex MP for vROps 8.x - Part3 - Dashboards

- PowerFlex System Overview

- PowerFlex Manager Details

- PowerFlex Management Controller

- PowerFlex ESXi Cluster Usage

- PowerFlex ESXi Host Usage

- PowerFlex SVM Utilization

- PowerFlex Networking Environment

- PowerFlex Networking Performance

- PowerFlex Summary

- PowerFlex Details

- PowerFlex Replication Details

- PowerFlex Node Summary

- PowerFlex Node Details

PowerFlex Node Summary

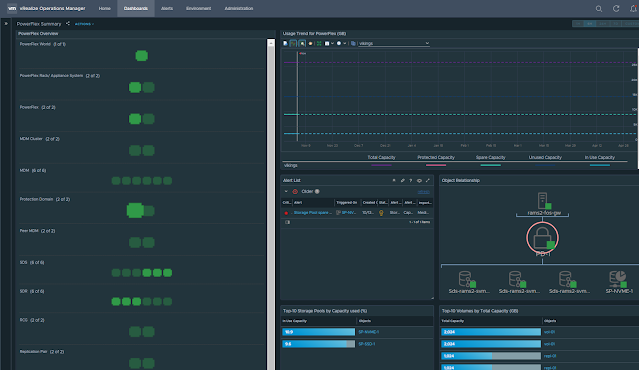

PowerFlex Summary


Monday, November 2, 2020

Dell EMC PowerFlex MP for vROps 8.x - Part2 - Configure

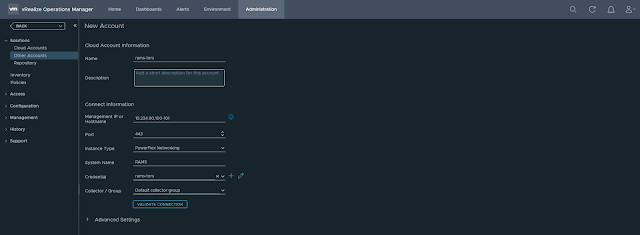

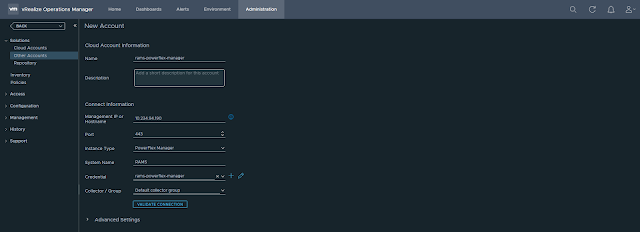

In this post, I will explain how to configure the PowerFlex Management Pack for vROps.

Before getting into the configuration, I would like to provide a high-level view of my lab setup. I have two separate PowerFlex rack systems that I will be monitoring using the management pack. The two systems are named RAMS and VIKINGS and have the following components.

- PowerFlex Networking - queries and collects networking details from Cisco switches

- PowerFlex Gateway - queries and collects storage details from PowerFlex Gateway

- PowerFlex Nodes - queries and collects server hardware health details from iDRACs

- PowerFlex Manager - queries and collects service deployment details from PowerFlex Manager

PowerFlex Networking

Let's configure the account for monitoring Cisco TOR switches of the RAMS cluster.

Provide the following details:

- Name

- Management IP address of Cisco TOR switches

Select the instance type as "PowerFlex Networking" and provide a system name.

In this case, these TOR switches are part of RAMS, so I have set the system name to RAMS.

Click ADD to save the account. The account we just created will appear on the Other Accounts page.

Initially, the status will show Warning, but it should change to OK within a few seconds.

PowerFlex Gateway

PowerFlex Nodes

PowerFlex Manager


Friday, October 30, 2020

Dell EMC PowerFlex MP for vROps 8.x - Part1 - Install

Related posts

Part2 - Configure

Part3 - Dashboards

Part4 - Resource kinds and relationships


Friday, October 23, 2020

VMware PowerCLI 101 - part8 - Working with vSAN

This article explains how to work with vSAN resources using PowerCLI.

Note: I am using the following versions:

PowerShell: 5.1.14393.3866

VMware PowerCLI: 12.1.0.17009493

Connect to vCenter:

```powershell
Connect-VIServer <IP of vCenter server>
```

List all vSAN get cmdlets:

```powershell
Get-Command Get-Vsan*
```

vSAN runtime info:

```powershell
$c = Get-Cluster Cluster01
Get-VsanRuntimeInfo -Cluster $c
```

vSAN space usage:

```powershell
Get-VsanSpaceUsage -Cluster $c
```

vSAN cluster configuration:

Related posts

VMware PowerCLI 101 - Part1 - Installing the module and working with stand-alone ESXi host

VMware PowerCLI 101 - Part2 - Working with vCenter server

VMware PowerCLI 101 - Part3 - Basic VM operations

VMware PowerCLI 101 - Part4 - Snapshots

VMware PowerCLI 101 - Part5 - Real time storage IOPS and latency

VMware PowerCLI 101 - part6 - vSphere networking

VMware PowerCLI 101 - part7 - Working with vROps

Saturday, June 30, 2018

Introduction to Nutanix cluster components

Figure: Nutanix cluster components

- Stargate: Data I/O manager for the cluster.

- Medusa: Access interface for Cassandra.

- Cassandra: Distributed metadata store.

- Curator: Handles MapReduce cluster management and cleanup.

- Zookeeper: Manages cluster configuration.

- Zeus: Access interface for Zookeeper.

- Prism: Management interface for Nutanix UI, nCLI and APIs.

Stargate

- Responsible for all data management and I/O operations.

- It is the main point of contact for a Nutanix cluster.

- Workflow: read/write from VM <-> hypervisor <-> Stargate.

- Stargate works closely with Curator to ensure data is protected and optimized.

- It also depends on Medusa to gather metadata and on Zeus to gather cluster configuration data.

Medusa

- Medusa is the Nutanix abstraction layer that sits in front of the database that holds the cluster metadata.

- Stargate and Curator communicate with Cassandra through Medusa.

Cassandra

- It is a distributed, high-performance and scalable database.

- It stores all metadata about all VMs stored in a Nutanix datastore.

- It needs confirmation from at least one other Cassandra node to commit its operations.

- Cassandra depends on Zeus for cluster configuration.

Curator

- Curator constantly accesses the environment and is responsible for managing and distributing data throughout the cluster.

- It performs disk balancing and information lifecycle management.

- A Curator master node is elected; it manages task and job delegation.

- The master node coordinates periodic scans of the metadata database and identifies cleanup and optimization tasks that Stargate or other components should perform.

- The master is also responsible for analyzing the metadata; this work is shared across all Curator nodes using a MapReduce algorithm.
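The map, shuffle and reduce steps of such a metadata scan can be illustrated with a toy, single-process Python sketch. It is not Nutanix's implementation: the record format (an extent ID plus the node holding one replica) and the replication factor of 2 are hypothetical choices made for illustration. The map phase emits key/value pairs, the shuffle groups them by extent, and the reduce phase flags extents with fewer distinct replicas than required, which is the kind of task Curator would hand off to Stargate.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(records: Iterable[tuple[str, str]]) -> Iterator[tuple[str, str]]:
    """Map: emit one (extent_id, node_id) pair per replica found in the metadata."""
    for extent_id, node_id in records:
        yield extent_id, node_id


def shuffle(pairs: Iterable[tuple[str, str]]) -> dict[str, set[str]]:
    """Shuffle: group values by key, as a MapReduce framework does between phases."""
    groups: dict[str, set[str]] = defaultdict(set)
    for extent_id, node_id in pairs:
        groups[extent_id].add(node_id)
    return groups


def reduce_phase(groups: dict[str, set[str]], replication_factor: int) -> list[tuple[str, int]]:
    """Reduce: report how many replicas each under-replicated extent is missing."""
    tasks = []
    for extent_id in sorted(groups):
        missing = replication_factor - len(groups[extent_id])
        if missing > 0:
            tasks.append((extent_id, missing))
    return tasks


def curator_scan(records: Iterable[tuple[str, str]], replication_factor: int = 2) -> list[tuple[str, int]]:
    """Run the three phases; replication_factor=2 is an assumed default."""
    return reduce_phase(shuffle(map_phase(records)), replication_factor)


# Hypothetical metadata: e3 lists two replicas, but both are on the same node.
metadata = [("e1", "nodeA"), ("e1", "nodeB"), ("e2", "nodeA"), ("e3", "nodeC"), ("e3", "nodeC")]
print(curator_scan(metadata))  # [('e2', 1), ('e3', 1)]
```

In a real cluster the map and reduce work would be spread across the Curator nodes rather than run in one process.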

Zookeeper

- It runs on 3 nodes in the cluster.

- This can be increased to 5 nodes in the cluster.

- Zookeeper coordinates and distributes services.

- One Zookeeper node is elected as the leader.

- All Zookeeper nodes can process reads.

- The leader handles cluster configuration write requests and forwards them to its peers.

- If the leader fails to respond, a new leader is elected.
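The choice between 3 and 5 Zookeeper nodes follows from majority quorum: the leader can only commit a write, and a new leader can only be elected, while a strict majority of the ensemble is reachable. A minimal Python sketch of that arithmetic (the function names are illustrative and are not part of any Nutanix or ZooKeeper API):

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest number of members that forms a strict majority."""
    return ensemble_size // 2 + 1


def max_failures(ensemble_size: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return ensemble_size - quorum_size(ensemble_size)


def write_committed(ensemble_size: int, acks: int) -> bool:
    """The leader commits a write once a majority (counting itself) has acknowledged it."""
    return acks >= quorum_size(ensemble_size)


for n in (3, 5):
    print(f"{n} nodes: quorum {quorum_size(n)}, tolerates {max_failures(n)} failure(s)")
```

This prints a quorum of 2 with 1 tolerated failure for 3 nodes, and a quorum of 3 with 2 tolerated failures for 5 nodes, which is why growing the ensemble to 5 nodes improves resilience.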

Zeus

- Zeus is the Nutanix library interface that all other components use to access cluster configuration information.

- It is responsible for cluster configuration and leadership logs.

- If Zeus goes down, everything goes down!

Prism

- Prism is the central entity for viewing activity inside the cluster.

- It is the management gateway for administrators to configure and monitor a Nutanix cluster.

- It also elects a leader node.

- Prism depends on data stored in Zookeeper and Cassandra.

Wednesday, May 30, 2018

Creating HTML report of ScaleIO cluster using PowerShell

Use case: This script can be used as part of a daily cluster health/stats reporting process (or something similar), so that monitoring engineers, or whoever is responsible, can review it every day and make sure everything is healthy and working normally.
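The core of such a report is turning collected cluster stats into an HTML table. The sketch below illustrates only that rendering step, in Python rather than the author's PowerShell script; the column names and sample values are hypothetical, and collecting the stats from the ScaleIO cluster is out of scope here.

```python
from html import escape


def build_report(title: str, rows: list[dict[str, object]]) -> str:
    """Render a list of dicts (all sharing the same keys) as an HTML page with one table."""
    parts = [
        f"<html><head><title>{escape(title)}</title></head><body>",
        f"<h1>{escape(title)}</h1>",
    ]
    if rows:
        headers = list(rows[0])
        parts.append("<table border='1'><tr>")
        parts.extend(f"<th>{escape(h)}</th>" for h in headers)
        parts.append("</tr>")
        for row in rows:
            # Escape every value so names or messages containing < or & cannot break the markup.
            cells = "".join(f"<td>{escape(str(row.get(h, '')))}</td>" for h in headers)
            parts.append(f"<tr>{cells}</tr>")
        parts.append("</table>")
    else:
        parts.append("<p>No data collected.</p>")
    parts.append("</body></html>")
    return "".join(parts)


# Hypothetical sample row; a real report would use stats collected from the cluster.
html_page = build_report("ScaleIO daily report", [{"SDS": "sds01", "State": "Connected", "Used %": 42}])
```

The returned string can then be written to a file or attached to a daily e-mail.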


Hope this was helpful. Cheers!

Thursday, November 30, 2017

Software Defined Storage using ScaleIO

- SDC - ScaleIO Data Client

- SDS - ScaleIO Data Server

- MDM - Meta Data Manager

In this scenario, all 5 nodes have ESXi installed and are clustered. All nodes have local hard disks, and it is the responsibility of the ScaleIO software to pool the hard disks from all 5 nodes into a distributed virtual SAN.

SDC is a lightweight driver that is responsible for presenting LUNs provisioned from the ScaleIO system. SDS is responsible for managing the local disks present in each node. MDM contains all the metadata required for system operation and configuration changes. It manages the metadata, SDCs, SDSs, system capacity, device mappings, volumes, data protection, errors/failures, and rebuild and rebalance operations. ScaleIO supports 3-node and 5-node MDM clusters. The figure above shows a 5-node MDM cluster, which has 3 manager MDMs (one master and two slaves) and two Tie-Breakers (TBs) that help decide the master MDM by maintaining a majority in the cluster. In a production environment with 5 or more nodes, a 5-node MDM cluster is recommended because it can tolerate 2 MDM failures.
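The majority rule behind the MDM cluster can be sketched in Python. This is a simplified model, not ScaleIO code: it assumes every member (manager or tie-breaker) has one vote, that the cluster stays available while a strict majority of members survives, and that at least one surviving manager is needed because tie-breakers cannot become master. The 3-node layout used for comparison (two managers and one tie-breaker) is also an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MdmMember:
    name: str
    role: str  # "manager" (can be master or slave) or "tiebreaker"


def cluster_available(members: list[MdmMember], failed: set[str]) -> bool:
    """True if the surviving members still form a strict majority and at
    least one of them is a manager that can act as master."""
    alive = [m for m in members if m.name not in failed]
    has_majority = len(alive) > len(members) // 2
    has_manager = any(m.role == "manager" for m in alive)
    return has_majority and has_manager


# Hypothetical member names.
five_node = (
    [MdmMember(f"mdm{i}", "manager") for i in (1, 2, 3)]
    + [MdmMember(f"tb{i}", "tiebreaker") for i in (1, 2)]
)
three_node = [
    MdmMember("mdm1", "manager"),
    MdmMember("mdm2", "manager"),
    MdmMember("tb1", "tiebreaker"),
]

print(cluster_available(five_node, {"mdm1", "tb1"}))   # True: 3 of 5 members remain
print(cluster_available(three_node, {"mdm1", "tb1"}))  # False: only 1 of 3 remains
```

Under these assumptions the 5-node cluster survives any 2 member failures, while the 3-node cluster survives only 1, which matches the recommendation above.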

ScaleIO uses a distributed two-way mesh mirroring scheme to protect data against disk or node failures. For QoS, you can limit both bandwidth and IOPS for each volume provisioned from a ScaleIO cluster. In terms of scalability, a single ScaleIO cluster supports up to 1024 nodes. In very large ScaleIO deployments, it is highly recommended to configure separate protection domains and fault sets to minimize the impact of multiple simultaneous failures.
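A simplified sketch of how a two-way mesh mirror spreads copies can make the idea concrete. This is not ScaleIO's actual placement algorithm: it uses a deterministic round-robin rotation, and the node and fault set names are hypothetical. Each chunk gets a primary copy on one node and a secondary copy on a node in a different fault set, so primaries and secondaries are spread across all nodes and the loss of a single fault set never removes both copies of a chunk.

```python
def place_chunks(num_chunks: int, fault_sets: dict[str, str]) -> list[tuple[str, str]]:
    """Return a (primary, secondary) node pair for each chunk.

    fault_sets maps node name -> fault set name. Without fault sets, map each
    node to its own name so the two copies only need to land on different nodes.
    """
    nodes = sorted(fault_sets)
    if len(set(fault_sets.values())) < 2:
        raise ValueError("mirroring needs nodes in at least two fault sets")
    placements = []
    for i in range(num_chunks):
        # Rotate primaries across all nodes.
        primary = nodes[i % len(nodes)]
        # The secondary must live outside the primary's fault set; rotate among those nodes too.
        candidates = [n for n in nodes if fault_sets[n] != fault_sets[primary]]
        secondary = candidates[i % len(candidates)]
        placements.append((primary, secondary))
    return placements


# Hypothetical lab: four SDS nodes split into two fault sets.
lab = {"sds1": "fs-a", "sds2": "fs-a", "sds3": "fs-b", "sds4": "fs-b"}
for chunk, (primary, secondary) in enumerate(place_chunks(4, lab)):
    print(f"chunk {chunk}: primary={primary}, secondary={secondary}")
```

Because every chunk is stored twice, usable capacity in this model is roughly half of the raw capacity.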

You can download ScaleIO software for free to test and play around in your lab environment.


Friday, April 28, 2017

Storage Spaces Direct - Volumes and Resiliency

Mirror

- Recommended for workloads that have strict latency requirements or that need lots of mixed random IOPS

- E.g.: SQL Server databases or performance-sensitive Hyper-V VMs

- If you have a 2-node cluster, Storage Spaces Direct automatically uses two-way mirroring for resiliency

- If your cluster has 3 nodes, it automatically uses three-way mirroring

- A three-way mirror can sustain two fault domain failures at the same time

- You can create a two-way mirror by specifying "-PhysicalDiskRedundancy 1"
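The relationship between node count, number of copies, fault tolerance and capacity efficiency for mirrored volumes can be sketched in Python. The choice of two-way versus three-way mirroring follows the bullets above, extended on the assumption that clusters with more than 3 nodes also default to three-way mirroring; the function names are illustrative.

```python
def mirror_profile(node_count: int) -> dict[str, float]:
    """Default mirror layout for a cluster of node_count nodes (sketch)."""
    if node_count < 2:
        raise ValueError("Storage Spaces Direct mirroring needs at least 2 nodes")
    copies = 2 if node_count == 2 else 3
    return {
        "copies": copies,
        "fault_tolerance": copies - 1,  # simultaneous fault domain failures survived
        "efficiency": 1 / copies,       # usable fraction of raw capacity
    }


def usable_capacity(raw_tb: float, node_count: int) -> float:
    """Usable capacity of a mirrored volume carved from raw_tb of pool space."""
    return raw_tb / mirror_profile(node_count)["copies"]
```

For example, 12 TB of raw pool capacity yields 6 TB usable with two-way mirroring and 4 TB with three-way mirroring, which is the price paid for surviving two simultaneous failures.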

Parity

- Recommended for workloads that write less frequently, such as data warehouses or "cold" storage, traditional file servers, VDI etc.

- Dual-parity volumes require a minimum of 4 nodes and can sustain two fault domain failures at the same time

- You can create single parity volumes using the below

Multi-Resilient Volumes (MRV)

- In Windows Server 2012 R2 Storage Spaces, when you create storage tiers you dedicate physical media types: SSDs for the performance tier and HDDs for the capacity tier

- In Windows Server 2016, tiers are differentiated not only by media type but also by resiliency type

- MRV = Three-way mirror + dual-parity

- In an MRV, the three-way mirror portion is treated as the performance tier and the dual-parity portion as the capacity tier

- Recommended for workloads that write in large, sequential passes, such as archival or backup targets

- Writes land in the mirror portion of the volume and are later gradually moved (rotated) into the parity portion

- By default, each MRV has a 32 MB write-back cache

- ReFS starts rotating data into the parity portion when the mirror portion reaches 60% utilization; as utilization increases, data moves to the parity portion faster

- You need a minimum of 4 nodes to create an MRV
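The MRV behaviour above can be modelled roughly in Python. The 60% rotation threshold comes from the post; the linear ramp in rotation speed above that threshold is a hypothetical stand-in for "gradually increases", and the 50% dual-parity efficiency is an assumed figure for a 4-node cluster, passed as a parameter so it can be changed.

```python
ROTATION_THRESHOLD = 0.60  # from the post: rotation starts at 60% mirror utilization


def rotation_rate(mirror_utilization: float) -> float:
    """Relative rotation speed (0.0 = idle, 1.0 = full speed).

    Hypothetical model: idle below the threshold, then a linear ramp that
    reaches full speed when the mirror portion is 100% utilized.
    """
    if mirror_utilization < ROTATION_THRESHOLD:
        return 0.0
    ramp = (mirror_utilization - ROTATION_THRESHOLD) / (1.0 - ROTATION_THRESHOLD)
    return min(ramp, 1.0)


def mrv_footprint(mirror_gb: float, parity_gb: float, parity_efficiency: float = 0.5) -> float:
    """Raw pool capacity consumed by an MRV.

    The three-way mirror tier stores 3 copies; the dual-parity tier consumes
    parity_gb / parity_efficiency (0.5 is an assumed value for 4 nodes).
    """
    return mirror_gb * 3 + parity_gb / parity_efficiency
```

For example, an MRV with a 100 GB mirror portion and a 900 GB parity portion would consume 300 GB + 1,800 GB = 2,100 GB of raw pool capacity under these assumptions, compared with 3,000 GB if the whole 1,000 GB were three-way mirrored.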