Important note: This procedure is NOT officially supported for vROps. I used it for a specific one-off use case in my lab. It is just a quick workaround and is not recommended in production environments, as it is not supported by VMware.

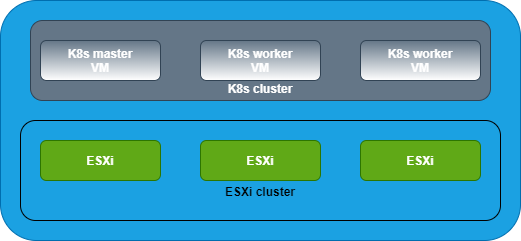

This article explains how to add multiple network interfaces to a vROps 8.0 or 8.1 appliance. Recently we had a scenario where the vROps appliance needed access to different networks that are isolated from, and not routed to, the network of the primary vROps management interface. In my case, the vROps instance needed access to 3 different networks.

Right after installation, vROps has only one interface, configured in 10-eth0.network, and it's the default interface for the vROps appliance.

To configure additional interfaces, follow the steps below:

- Add a network card to the vROps VM and connect it to the respective port group by editing the VM settings

- Log in to vROps with root credentials

- cd /etc/systemd/network/

- Create an entry for the new interface, 10-eth1.network (it will not be present by default)

- vi 10-eth1.network

- Provide all necessary IP details and save

- Restart the network service: systemctl restart systemd-networkd

- Verify the configuration by running /opt/vmware/share/vami/vami_config_net

- Similarly, follow the above steps if you require more interfaces
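
As a rough illustration of the "Provide all necessary IP details" step, a minimal 10-eth1.network file might look like the sketch below. The address is a made-up example, not a value from my lab, and the layout assumes the standard systemd-networkd file format used by the existing 10-eth0.network; adjust it to your own network.

```ini
# /etc/systemd/network/10-eth1.network
# Sketch only: the address below is a hypothetical example value.

[Match]
# Must match the name of the newly added interface
Name=eth1

[Network]
# Static IP and prefix length for the isolated network (example value)
Address=192.168.10.50/24
```

No Gateway line is included here on the assumption that the default route stays on eth0 and the additional network is reached directly. It is also worth checking that the new file has the same ownership and permissions as 10-eth0.network, since systemd-networkd needs to be able to read it.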

Note: This solution is NOT officially supported for vROps. It is not recommended in production environments.
