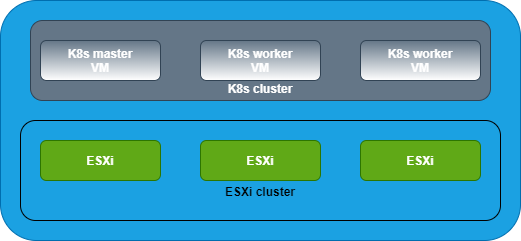

This article explains how to work with vRealize Operations (vROps) resources using PowerCLI. The following diagram shows the relationship between adapters, resource kinds, and resources. Multiple adapters can be installed on a vROps instance. Each adapter kind has multiple resource kinds, each resource kind has multiple resources, and each resource has its own badges and badge scores.

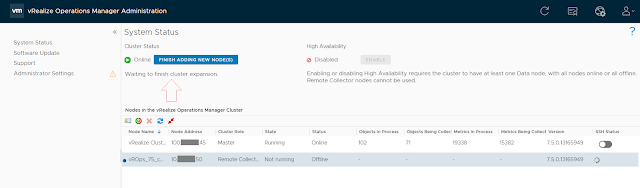

Note: I am using the following versions:

PowerShell: 5.1.14393.3383

VMware PowerCLI: 11.3.0.13990089

vROps: 7.0

Connect to vROps:

Connect-OMServer <IP of vROps>
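If you prefer not to type credentials on the command line, the connection can be sketched roughly as below. The server name `vrops.example.local` is a hypothetical placeholder, and the example assumes the account you enter has access to vROps.

```powershell
# Hypothetical server name; replace with the FQDN or IP of your vROps instance.
$vropsServer = 'vrops.example.local'

# Prompt for credentials instead of embedding them in the script.
$cred = Get-Credential -Message 'vROps credentials'

# Connect and keep the connection object so it can be closed explicitly later.
$conn = Connect-OMServer -Server $vropsServer -Credential $cred

# ... run the Get-OMResource / Get-OMAlert queries here ...

# Disconnect when finished.
Disconnect-OMServer -Server $conn -Confirm:$false
```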

Get the list of all installed adapters:

Get-OMResource | select AdapterKind -Unique

Get all resource kinds of a specific adapter:

Get-OMResource -AdapterKind VMWARE | select ResourceKind -Unique
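To see the adapter kind and resource kind hierarchy in one view, the two queries above can be combined with `Group-Object`. This is only a sketch; it assumes an active `Connect-OMServer` session and may take a while on large environments because it retrieves every resource.

```powershell
# Count resources per (AdapterKind, ResourceKind) pair.
# Group-Object joins the two property values into the Name column,
# for example "VMWARE, Datacenter".
Get-OMResource |
    Group-Object -Property AdapterKind, ResourceKind |
    Sort-Object -Property Name |
    Select-Object -Property Count, Name
```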

Get the list of resources of a specific resource kind:

Get-OMResource -ResourceKind Datacenter

Another example:

Get-OMResource -ResourceKind ClusterComputeResource

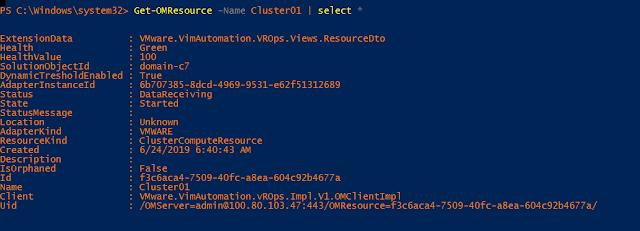

Get details of a specific resource:

Get-OMResource -Name Cluster01 | select *

Get badge details of a selected resource:

(Get-OMResource -Name Cluster01).ExtensionData.Badges
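The same idea can be extended to several resources at once. The sketch below flattens the badges of every cluster into one table. The badge property names `Type`, `Color`, and `Score` are an assumption on my part; confirm them in your PowerCLI version with `(Get-OMResource -Name Cluster01).ExtensionData.Badges | Get-Member`.

```powershell
# Build one row per badge for every cluster resource.
Get-OMResource -ResourceKind ClusterComputeResource | ForEach-Object {
    $resource = $_
    foreach ($badge in $resource.ExtensionData.Badges) {
        [pscustomobject]@{
            Resource = $resource.Name
            # Assumed property names; verify with Get-Member.
            Type     = $badge.Type
            Color    = $badge.Color
            Score    = $badge.Score
        }
    }
} | Format-Table -AutoSize
```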

List all resources of an adapter kind where health is not green:

Get-OMResource -AdapterKind VMWARE | select AdapterKind,ResourceKind,Name,Health,State,Status | where health -ne Green | ft

List all resources of an adapter kind that are not receiving data or are not started:

Get-OMResource -AdapterKind VMWARE | select AdapterKind,ResourceKind,Name,Health,State,Status | where {($_.Status -ne "DataReceiving") -or ($_.State -ne "Started")} | ft

Get the list of all active critical alerts for resources of a specific adapter kind:

Get-OMResource -AdapterKind VMWARE | Get-OMAlert -Criticality Critical -Status Active
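For a more readable overview, the alert output can be sorted and trimmed to a few columns. This is a sketch that assumes the alert objects expose `Name`, `Resource`, `Criticality`, `Status`, and `StartTime` properties; check with `Get-OMAlert | Get-Member` if any column comes back empty.

```powershell
# Newest active critical alerts first, reduced to the key columns.
Get-OMResource -AdapterKind VMWARE |
    Get-OMAlert -Criticality Critical -Status Active |
    Sort-Object -Property StartTime -Descending |
    Select-Object -Property Name, Resource, Criticality, Status, StartTime |
    Format-Table -AutoSize
```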

Hope it was helpful. Cheers!
