In the previous posts we discussed the following:

Part1: Prerequisites

Part2: Configure NSX-T

Part3: Edge Cluster

Configure Tier-0 Gateway

- Add Segments.

  - Create a segment "ls-uplink-v54"

    - VLAN: 54

    - Transport Zone: "edge-vlan-tz"

  - Create a segment "ls-uplink-v55"

    - VLAN: 55

    - Transport Zone: "edge-vlan-tz"

- Add Tier-0 Gateway.

- Provide the necessary details as shown below.

- Add four interfaces and configure them as per the logical diagram given above.

  - edge-01-uplink1 - 192.168.54.254/24 - connected via segment ls-uplink-v54

  - edge-01-uplink2 - 192.168.55.254/24 - connected via segment ls-uplink-v55

  - edge-02-uplink1 - 192.168.54.253/24 - connected via segment ls-uplink-v54

  - edge-02-uplink2 - 192.168.55.253/24 - connected via segment ls-uplink-v55

- Verify that the status shows Success for all four interfaces that you added.

- Routing and multicast settings of T0 are as follows:

- You can see a static route is configured. The next hop for the default route 0.0.0.0/0 is set to 192.168.54.1.

- The next hop configuration is given below.

- BGP settings of T0 are shown below.

- BGP Neighbor config:

- Verify that the status shows Success for both BGP neighbors that you added.

- Route re-distribution settings of T0:

- Add route re-distribution.

- Set route re-distribution.
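
Before moving on to the switches, it can help to sanity-check the uplink addressing offline. The sketch below is not part of NSX-T; it is a small standalone Python check that takes the four interface addresses and the default-route next hop listed above and confirms that each address falls in the subnet of its segment, that no address is reused, and that the next hop sits in a directly connected subnet. The segment-to-subnet mapping is an assumption inferred from the /24 addresses, and the function names are hypothetical.

```python
import ipaddress

# Uplink plan copied from the interface list above: name -> (address/prefix, segment)
UPLINKS = {
    "edge-01-uplink1": ("192.168.54.254/24", "ls-uplink-v54"),
    "edge-01-uplink2": ("192.168.55.254/24", "ls-uplink-v55"),
    "edge-02-uplink1": ("192.168.54.253/24", "ls-uplink-v54"),
    "edge-02-uplink2": ("192.168.55.253/24", "ls-uplink-v55"),
}

# Assumption: each VLAN-backed segment carries exactly one /24,
# inferred from the interface addresses rather than stated in the post.
SEGMENT_SUBNETS = {
    "ls-uplink-v54": ipaddress.ip_network("192.168.54.0/24"),
    "ls-uplink-v55": ipaddress.ip_network("192.168.55.0/24"),
}

# Next hop of the static default route 0.0.0.0/0 on the T0
DEFAULT_ROUTE_NEXT_HOP = ipaddress.ip_address("192.168.54.1")


def check_uplinks(uplinks, segment_subnets):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    seen = {}
    for name, (cidr, segment) in uplinks.items():
        iface = ipaddress.ip_interface(cidr)
        subnet = segment_subnets.get(segment)
        if subnet is None:
            problems.append(f"{name}: unknown segment {segment}")
        elif iface.network != subnet:
            problems.append(f"{name}: {iface} is not in {subnet} ({segment})")
        if iface.ip in seen:
            problems.append(f"{name}: {iface.ip} already used by {seen[iface.ip]}")
        seen[iface.ip] = name
    return problems


def next_hop_reachable(next_hop, uplinks):
    """A static next hop must sit in a subnet directly connected to some uplink."""
    return any(
        next_hop in ipaddress.ip_interface(cidr).network
        for cidr, _segment in uplinks.values()
    )


if __name__ == "__main__":
    print(check_uplinks(UPLINKS, SEGMENT_SUBNETS))
    print(next_hop_reachable(DEFAULT_ROUTE_NEXT_HOP, UPLINKS))
```

An empty problem list and a reachable next hop only confirm that the plan is internally consistent; the interface status in the NSX-T UI remains the real check.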

Configure TOR Switches

---On TOR A---

conf

router bgp 65500

neighbor 192.168.54.254 remote-as 65400 #peering to T0 edge-01 interface

neighbor 192.168.54.254 no shutdown

neighbor 192.168.54.253 remote-as 65400 #peering to T0 edge-02 interface

neighbor 192.168.54.253 no shutdown

neighbor 192.168.54.3 remote-as 65500 #peering to TOR B in VLAN 54

neighbor 192.168.54.3 no shutdown

maximum-paths ebgp 4

maximum-paths ibgp 4

---On TOR B---

conf

router bgp 65500

neighbor 192.168.55.254 remote-as 65400 #peering to T0 edge-01 interface

neighbor 192.168.55.254 no shutdown

neighbor 192.168.55.253 remote-as 65400 #peering to T0 edge-02 interface

neighbor 192.168.55.253 no shutdown

neighbor 192.168.54.2 remote-as 65500 #peering to TOR A in VLAN 54

neighbor 192.168.54.2 no shutdown

maximum-paths ebgp 4

maximum-paths ibgp 4
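
Each TOR ends up with two kinds of BGP sessions: the peerings to the T0 interfaces use a different AS number (65400), so they are eBGP, while the TOR-to-TOR peering stays inside AS 65500 and is iBGP. That is why both `maximum-paths` lines appear. The following Python sketch only illustrates that classification from the neighbor statements above; the dictionary layout and function names are hypothetical and nothing here talks to a switch.

```python
LOCAL_AS = 65500  # AS number from "router bgp 65500" on both TORs

# Neighbor statements from the TOR A and TOR B configs above: address -> remote AS
TOR_NEIGHBORS = {
    "TOR A": {
        "192.168.54.254": 65400,  # T0 edge-01 interface
        "192.168.54.253": 65400,  # T0 edge-02 interface
        "192.168.54.3": 65500,    # TOR B in VLAN 54
    },
    "TOR B": {
        "192.168.55.254": 65400,  # T0 edge-01 interface
        "192.168.55.253": 65400,  # T0 edge-02 interface
        "192.168.54.2": 65500,    # TOR A in VLAN 54
    },
}


def session_type(local_as, remote_as):
    """Same AS on both ends means iBGP; different AS numbers mean eBGP."""
    return "iBGP" if local_as == remote_as else "eBGP"


def summarize(neighbors, local_as):
    """Count eBGP and iBGP sessions for one switch."""
    counts = {"eBGP": 0, "iBGP": 0}
    for remote_as in neighbors.values():
        counts[session_type(local_as, remote_as)] += 1
    return counts


for tor, neighbors in TOR_NEIGHBORS.items():
    print(tor, summarize(neighbors, LOCAL_AS))
```

Both switches should report two eBGP sessions and one iBGP session, which matches the peering described in the configs.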

---Advertising ESXi mgmt and VM traffic networks in BGP on both TORs---

conf

router bgp 65500

network 192.168.41.0/24

network 192.168.43.0/24
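
If you keep the advertised prefixes in a list, a few lines of Python can catch a mistyped prefix before it reaches the switch. This is only a sketch: `network_statements` is a hypothetical helper, and it simply renders the same `network` lines shown above after checking that each entry is a valid network address.

```python
import ipaddress

# Prefixes advertised on both TORs, copied from the config above
ADVERTISED_PREFIXES = ["192.168.41.0/24", "192.168.43.0/24"]


def network_statements(prefixes):
    """Render one 'network' line per prefix, rejecting entries with host bits set."""
    lines = []
    for prefix in prefixes:
        # ip_network() is strict by default and raises ValueError
        # for something like 192.168.41.5/24.
        net = ipaddress.ip_network(prefix)
        lines.append(f"network {net}")
    return lines


if __name__ == "__main__":
    for line in network_statements(ADVERTISED_PREFIXES):
        print(line)
```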

Thanks to my friend and vExpert Harikrishnan @hari5611 for helping me with the T0 configs and BGP peering on TORs. Do check out his blog https://vxplanet.com/.

Verify BGP Configurations

The next step is to verify the BGP configs on TORs using the following commands:

show running-config bgp

show ip bgp summary

show ip bgp neighbors

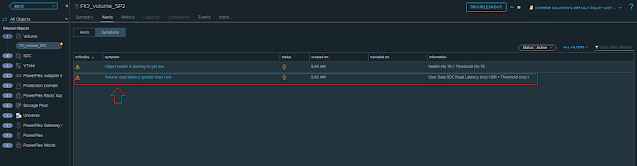

Follow the VMware documentation to verify the BGP connections from a Tier-0 Service Router. In the screenshot below, both Edge nodes show the BGP neighbors 192.168.54.2 and 192.168.55.3 in state Estab.

In the next article, I will talk about adding a T1 Gateway, adding new segments for apps, connecting VMs to the segments, and verifying connectivity to different internal and external networks. I hope this was useful. Cheers!